Introduction

This tutorial is an adaptation of https://vulkan-tutorial.com to use Rust instead of C++. The majority of the credit for this tutorial should go to the author of the original tutorial (Alexander Overvoorde) and the other contributors.

This tutorial also includes several additional chapters that are original creations of the author of this adapted tutorial (starting with the Push Constants chapter). These chapters introduce important Vulkan concepts and features that will be useful in almost any Vulkan application. However, as noted in the disclaimer for those chapters, they should be considered experimental.

About

This tutorial will teach you the basics of using the Vulkan graphics and compute API. Vulkan is a new API by the Khronos group (known for OpenGL) that provides a much better abstraction of modern graphics cards. This new interface allows you to better describe what your application intends to do, which can lead to better performance and less surprising driver behavior compared to existing APIs like OpenGL and Direct3D. The ideas behind Vulkan are similar to those of Direct3D 12 and Metal, but Vulkan has the advantage of being cross-platform and allows you to develop for Windows, Linux and Android at the same time (and iOS and macOS via MoltenVK).

However, the price you pay for these benefits is that you have to work with a significantly more verbose API. Every detail related to the graphics API needs to be set up from scratch by your application, including initial frame buffer creation and memory management for objects like buffers and texture images. The graphics driver will do a lot less hand holding, which means that you will have to do more work in your application to ensure correct behavior.

The takeaway message here is that Vulkan is not for everyone. It is targeted at programmers who are enthusiastic about high performance computer graphics, and are willing to put some work in. If you are more interested in game development, rather than computer graphics, then you may wish to stick to OpenGL or Direct3D, which will not be deprecated in favor of Vulkan anytime soon. Another alternative is to use an engine like Unreal Engine or Unity, which will be able to use Vulkan while exposing a much higher level API to you.

With that out of the way, let's cover some prerequisites for following this tutorial:

- A graphics card and driver compatible with Vulkan (NVIDIA, AMD, Intel)

- Experience with Rust

- Rust 1.88 or later

- Some existing experience with 3D computer graphics

This tutorial will not assume knowledge of OpenGL or Direct3D concepts, but it does require you to know the basics of 3D computer graphics. It will not explain the math behind perspective projection, for example. See this online book for a great introduction to computer graphics concepts. Some other great computer graphics resources are:

- Ray tracing in one weekend

- Physically Based Rendering book

- Vulkan being used in a real engine in the open-source Quake and DOOM 3

If you want a C++ tutorial instead, see the original tutorial:

https://vulkan-tutorial.com

This tutorial uses the vulkanalia crate to provide access to the Vulkan API from Rust. vulkanalia provides raw bindings to the Vulkan API as well as a thin wrapper over said bindings to make them easier and more idiomatic to use from Rust (more on this in the next chapter). This means that while you should never have any difficulty in determining exactly how your Rust programs are interacting with the Vulkan API, you will be shielded from little of the danger and verbosity of the Vulkan API.

If you want a Rust Vulkan tutorial that uses a crate which provides a safe and relatively concise wrapper around the Vulkan API (vulkano), see this tutorial:

https://github.com/bwasty/vulkan-tutorial-rs

Tutorial structure

We'll start with an overview of how Vulkan works and the work we'll have to do to get the first triangle on the screen. The purpose of all the smaller steps will make more sense after you've understood their basic role in the whole picture. Next, we'll set up the development environment with the Vulkan SDK.

After that we'll implement all of the basic components of a Vulkan program that are necessary to render your first triangle. Each chapter will follow roughly the following structure:

- Introduce a new concept and its purpose

- Use all of the relevant API calls to integrate it into your program

- Abstract parts of it into helper functions

Although each chapter is written as a follow-up to the previous one, it is also possible to read the chapters as standalone articles introducing a certain Vulkan feature. That means that the site is also useful as a reference. All of the Vulkan functions and types are linked to either the Vulkan specification or the vulkanalia documentation, so you can click them to learn more. Vulkan is still a fairly young API, so there may be some shortcomings in the specification itself. You are encouraged to submit feedback to this Khronos repository.

As mentioned before, Vulkan has a rather verbose API with many parameters to give you maximum control over the graphics hardware. This causes basic operations like creating a texture to take a lot of steps that have to be repeated every time. Therefore we'll be creating our own collection of helper functions throughout the tutorial.

Every chapter will also start with a link to the final code for that chapter. You can refer to it if you have any doubts about the structure of the code, or if you're dealing with a bug and want to compare.

This tutorial is intended to be a community effort. Vulkan is still a fairly new API and best practices haven't been fully established. If you have any type of feedback on the tutorial and site itself, then please don't hesitate to submit an issue or pull request to the GitHub repository.

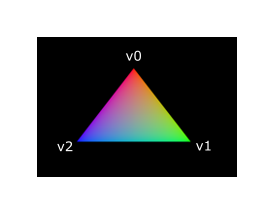

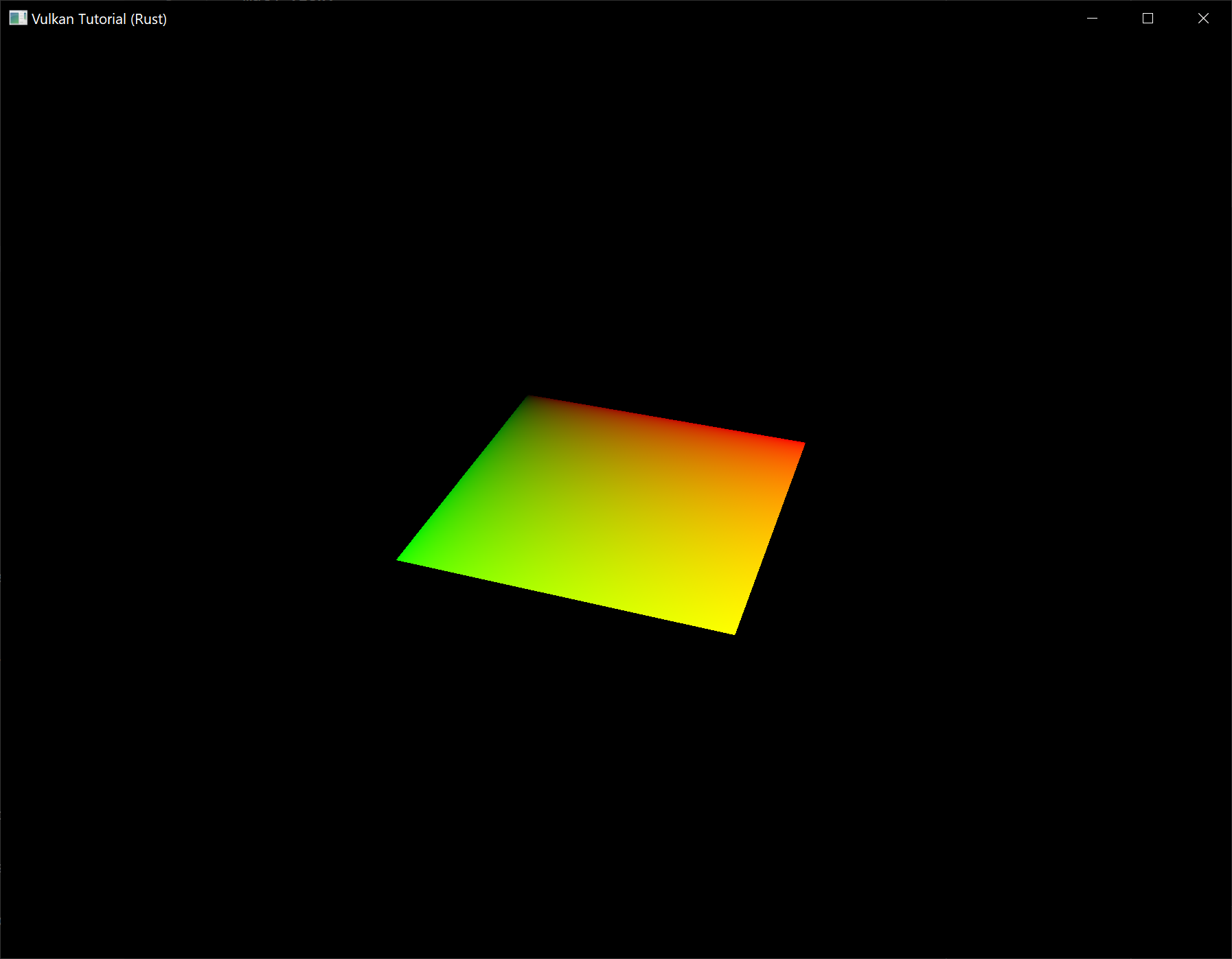

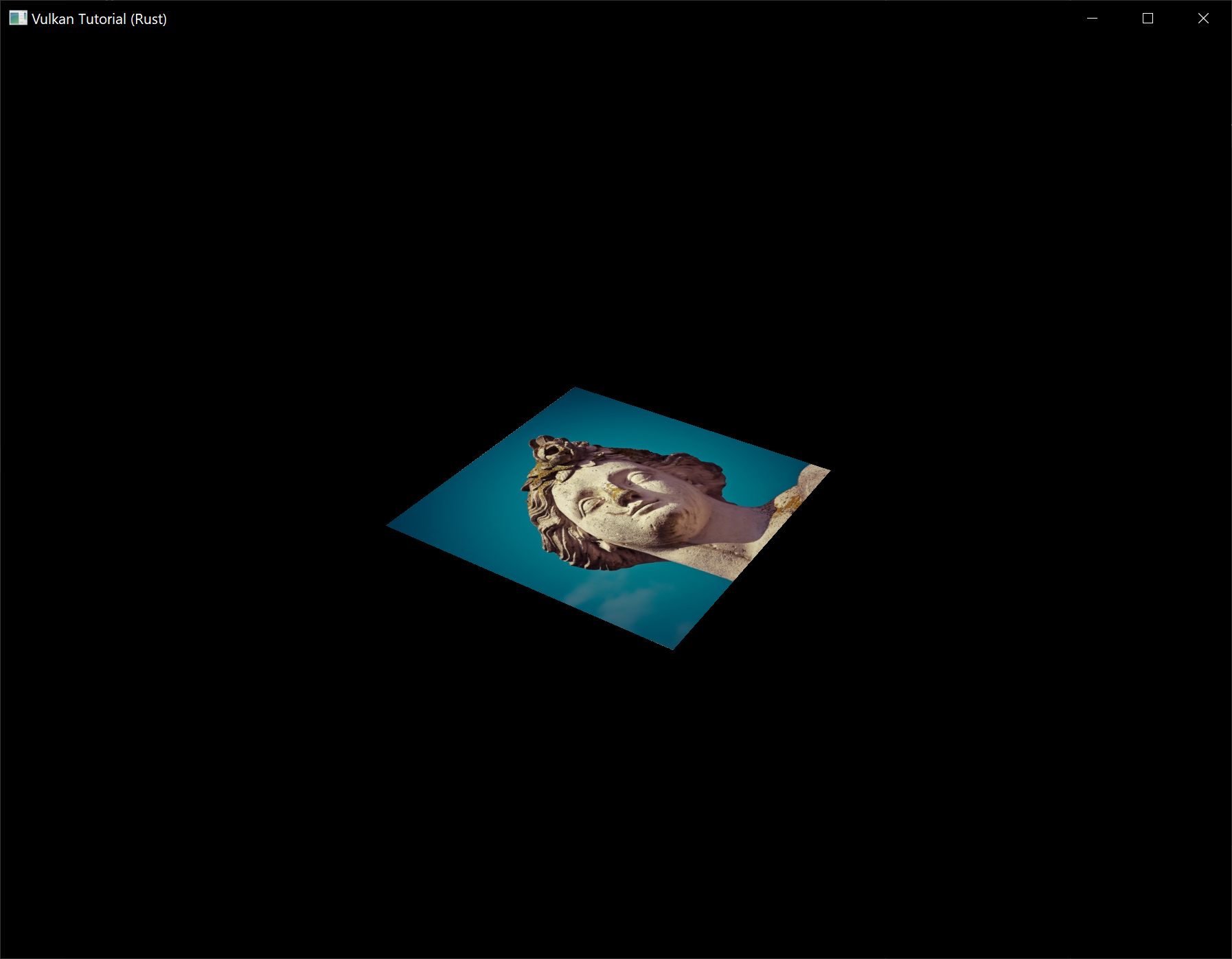

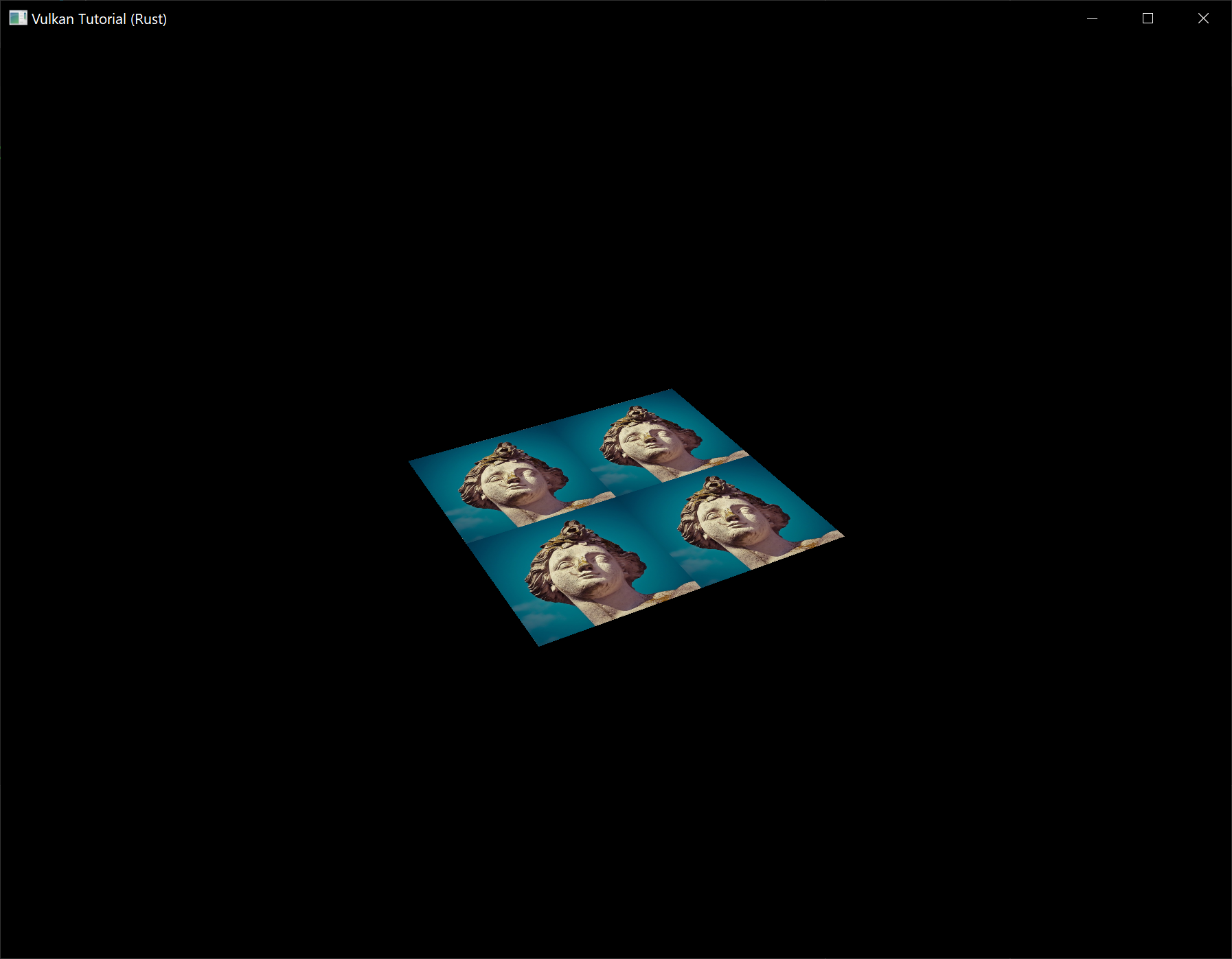

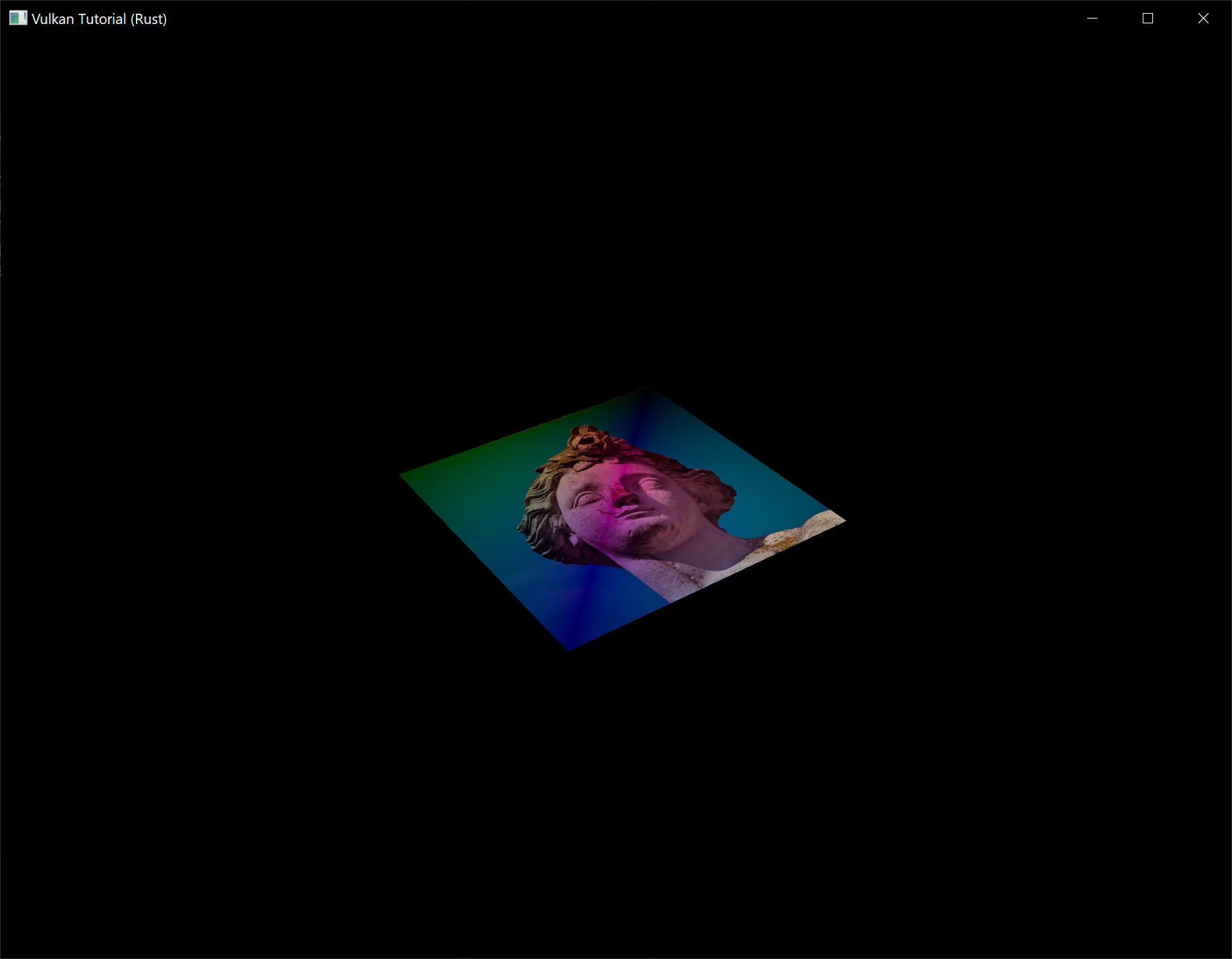

After you've gone through the ritual of drawing your very first Vulkan powered triangle onscreen, we'll start expanding the program to include linear transformations, textures and 3D models.

If you've played with graphics APIs before, then you'll know that there can be a lot of steps until the first geometry shows up on the screen. There are many of these initial steps in Vulkan, but you'll see that each of the individual steps is easy to understand and does not feel redundant. It's also important to keep in mind that once you have that boring looking triangle, drawing fully textured 3D models does not take that much extra work, and each step beyond that point is much more rewarding.

If you encounter any problems while following the tutorial, check the FAQ to see if your problem and its solution are already listed there. Failing that, you might find someone who had the same problem (if it is not Rust-specific) in the comment section for the corresponding chapter in the original tutorial.

Overview

This chapter will start off with an introduction of Vulkan and the problems it addresses. After that we're going to look at the ingredients that are required for the first triangle. This will give you a big picture to place each of the subsequent chapters in. We will conclude by covering the structure of the Vulkan API as implemented by vulkanalia.

Origin of Vulkan

Just like the previous graphics APIs, Vulkan is designed as a cross-platform abstraction over GPUs. The problem with most of these APIs is that the era in which they were designed featured graphics hardware that was mostly limited to configurable fixed functionality. Programmers had to provide the vertex data in a standard format and were at the mercy of the GPU manufacturers with regard to lighting and shading options.

As graphics card architectures matured, they started offering more and more programmable functionality. All this new functionality had to be integrated with the existing APIs somehow. This resulted in less than ideal abstractions and a lot of guesswork on the graphics driver side to map the programmer's intent to the modern graphics architectures. That's why there are so many driver updates for improving the performance in games, sometimes by significant margins. Because of the complexity of these drivers, application developers also need to deal with inconsistencies between vendors, like the syntax that is accepted for shaders.

Aside from these new features, the past decade also saw an influx of mobile devices with powerful graphics hardware. These mobile GPUs have different architectures based on their energy and space requirements. One such example is tiled rendering, which could perform better if the programmer were given more control over this functionality. Another limitation originating from the age of these APIs is limited multi-threading support, which can result in a bottleneck on the CPU side.

Vulkan solves these problems by being designed from scratch for modern graphics architectures. It reduces driver overhead by allowing programmers to clearly specify their intent using a more verbose API, and allows multiple threads to create and submit commands in parallel. It reduces inconsistencies in shader compilation by switching to a standardized byte code format with a single compiler. Lastly, it acknowledges the general purpose processing capabilities of modern graphics cards by unifying the graphics and compute functionality into a single API.

What it takes to draw a triangle

We'll now look at an overview of all the steps it takes to render a triangle in a well-behaved Vulkan program. All of the concepts introduced here will be elaborated on in the next chapters. This is just to give you a big picture to relate all of the individual components to.

Step 1 - Instance and physical device selection

A Vulkan application starts by setting up the Vulkan API through a VkInstance. An instance is created by describing your application and any API extensions you will be using. After creating the instance, you can query for Vulkan supported hardware and select one or more VkPhysicalDevices to use for operations. You can query for properties like VRAM size and device capabilities to select desired devices, for example to prefer using dedicated graphics cards.
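The selection logic described above can be sketched without any Vulkan calls. The following is a minimal model, not vulkanalia code: `DeviceType`, `DeviceInfo`, and `pick_device` are hypothetical names, and the ranking rule (reject devices without graphics support, prefer dedicated cards, then prefer more VRAM) is only one reasonable assumption about what "desired" means.

```rust
/// Hypothetical stand-in for the device type reported by Vulkan.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub enum DeviceType {
    Integrated,
    Discrete,
    Cpu,
}

/// Hypothetical summary of the properties queried from a physical device.
#[derive(Clone, Debug, PartialEq, Eq)]
pub struct DeviceInfo {
    pub name: String,
    pub device_type: DeviceType,
    pub vram_bytes: u64,
    pub supports_graphics: bool,
}

/// Picks the most suitable device: devices without graphics support are
/// rejected, discrete GPUs win over everything else, and VRAM size is used
/// to rank devices of the same kind.
pub fn pick_device(devices: &[DeviceInfo]) -> Option<&DeviceInfo> {
    devices
        .iter()
        .filter(|d| d.supports_graphics)
        .max_by_key(|d| (d.device_type == DeviceType::Discrete, d.vram_bytes))
}
```

A real application would fill a structure like this from the properties, memory properties, and queue families it queries for each physical device, and might weigh other criteria as well.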

Step 2 - Logical device and queue families

After selecting the right hardware device to use, you need to create a VkDevice (logical device), where you describe more specifically which VkPhysicalDeviceFeatures you will be using, like multi-viewport rendering and 64-bit floats. You also need to specify which queue families you would like to use. Most operations performed with Vulkan, like draw commands and memory operations, are asynchronously executed by submitting them to a VkQueue. Queues are allocated from queue families, where each queue family supports a specific set of operations in its queues. For example, there could be separate queue families for graphics, compute and memory transfer operations. The availability of queue families could also be used as a distinguishing factor in physical device selection. It is possible for a device with Vulkan support to not offer any graphics functionality; however, all graphics cards with Vulkan support today will generally support all queue operations that we're interested in.
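To make the idea of queue families more concrete, here is a small sketch of how a suitable family could be located. It is a simplified model rather than vulkanalia code: `QueueFamily` and `find_queue_family` are hypothetical, and the capability constants are plain integers modelled after Vulkan's queue flag bits.

```rust
/// Capability bits, modelled after the queue flags in Vulkan.
pub const GRAPHICS: u32 = 0b001;
pub const COMPUTE: u32 = 0b010;
pub const TRANSFER: u32 = 0b100;

/// Hypothetical description of one queue family on a device.
#[derive(Clone, Copy, Debug)]
pub struct QueueFamily {
    pub capabilities: u32,
    pub queue_count: u32,
}

/// Returns the index of the first family that has at least one queue and
/// supports every capability in `required`.
pub fn find_queue_family(families: &[QueueFamily], required: u32) -> Option<u32> {
    families
        .iter()
        .position(|f| f.queue_count > 0 && (f.capabilities & required) == required)
        .map(|index| index as u32)
}
```

The returned index is what you would later hand to logical device creation to say which family your queues should come from. A `None` result is one way a physical device could be ruled out during selection.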

Step 3 - Window surface and swapchain

Unless you're only interested in offscreen rendering, you will need to create a window to present rendered images to. Windows can be created with the native platform APIs or libraries like GLFW, SDL, or the winit crate. We will be using the winit crate in this tutorial, but more about that in the next chapter.

We need two more components to actually render to a window: a window surface (VkSurfaceKHR) and a swapchain (VkSwapchainKHR). Note the KHR postfix, which means that these objects are part of a Vulkan extension. The Vulkan API itself is completely platform agnostic, which is why we need to use the standardized WSI (Window System Interface) extension to interact with the window manager. The surface is a cross-platform abstraction over windows to render to and is generally instantiated by providing a reference to the native window handle, for example HWND on Windows. However, vulkanalia has optional integration with the winit crate which we will be leveraging to handle the platform-specific details of creating a window and associated surface for us.

The swapchain is a collection of render targets. Its basic purpose is to ensure that the image that we're currently rendering to is different from the one that is currently on the screen. This is important to make sure that only complete images are shown. Every time we want to draw a frame we have to ask the swapchain to provide us with an image to render to. When we've finished drawing a frame, the image is returned to the swapchain for it to be presented to the screen at some point. The number of render targets and conditions for presenting finished images to the screen depends on the present mode. Common present modes are double buffering (vsync) and triple buffering. We'll look into these in the swapchain creation chapter.

Some platforms allow you to render directly to a display without interacting with any window manager through the VK_KHR_display and VK_KHR_display_swapchain extensions. These allow you to create a surface that represents the entire screen and could be used to implement your own window manager, for example.

Step 4 - Image views and framebuffers

To draw to an image acquired from the swapchain, we have to wrap it into a VkImageView and VkFramebuffer. An image view references a specific part of an image to be used, and a framebuffer references image views that are to be used for color, depth and stencil targets. Because there could be many different images in the swapchain, we'll preemptively create an image view and framebuffer for each of them and select the right one at draw time.

Step 5 - Render passes

Render passes in Vulkan describe the type of images that are used during rendering operations, how they will be used, and how their contents should be treated. In our initial triangle rendering application, we'll tell Vulkan that we will use a single image as color target and that we want it to be cleared to a solid color right before the drawing operation. Whereas a render pass only describes the type of images, a VkFramebuffer actually binds specific images to these slots.

Step 6 - Graphics pipeline

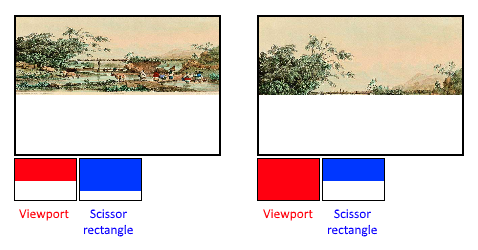

The graphics pipeline in Vulkan is set up by creating a VkPipeline object. It describes the configurable state of the graphics card, like the viewport size and depth buffer operation, and the programmable state using VkShaderModule objects. The VkShaderModule objects are created from shader byte code. The driver also needs to know which render targets will be used in the pipeline, which we specify by referencing the render pass.

One of the most distinctive features of Vulkan compared to existing APIs is that almost all configuration of the graphics pipeline needs to be set in advance. If you want to switch to a different shader or slightly change your vertex layout, then you need to entirely recreate the graphics pipeline. As a result, you will have to create many VkPipeline objects in advance for all the different combinations you need for your rendering operations. Only some basic configuration, like viewport size and clear color, can be changed dynamically. All of the state also needs to be described explicitly; there is no default color blend state, for example.

The good news is that because you're doing the equivalent of ahead-of-time compilation versus just-in-time compilation, there are more optimization opportunities for the driver and runtime performance is more predictable, because large state changes like switching to a different graphics pipeline are made very explicit.

Step 7 - Command pools and command buffers

As mentioned earlier, many of the operations in Vulkan that we want to execute, like drawing operations, need to be submitted to a queue. These operations first need to be recorded into a VkCommandBuffer before they can be submitted. These command buffers are allocated from a VkCommandPool that is associated with a specific queue family. To draw a simple triangle, we need to record a command buffer with the following operations:

- Begin the render pass

- Bind the graphics pipeline

- Draw 3 vertices

- End the render pass

Because the image in the framebuffer depends on which specific image the swapchain will give us, we need to record a command buffer for each possible image and select the right one at draw time. The alternative would be to record the command buffer again every frame, which is not as efficient.
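The "one command buffer per swapchain image" idea can be modelled in a few lines. This is only an illustration of the bookkeeping: `Command` and `record_command_buffers` are hypothetical, and real Vulkan commands are recorded through API calls with many more parameters.

```rust
/// Hypothetical commands that can be recorded into a command buffer.
#[derive(Clone, Debug, PartialEq, Eq)]
pub enum Command {
    BeginRenderPass { framebuffer: usize },
    BindPipeline,
    Draw { vertex_count: u32 },
    EndRenderPass,
}

/// Records one command buffer per swapchain image. Buffer `i` renders into
/// framebuffer `i`, which wraps swapchain image `i`.
pub fn record_command_buffers(image_count: usize) -> Vec<Vec<Command>> {
    (0..image_count)
        .map(|framebuffer| {
            vec![
                Command::BeginRenderPass { framebuffer },
                Command::BindPipeline,
                Command::Draw { vertex_count: 3 },
                Command::EndRenderPass,
            ]
        })
        .collect()
}
```

At draw time, the index of the image handed out by the swapchain selects which of these pre-recorded buffers to submit, so nothing has to be re-recorded per frame.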

Step 8 - Main loop

Now that the drawing commands have been wrapped into a command buffer, the main loop is quite straightforward. We first acquire an image from the swapchain with vkAcquireNextImageKHR. We can then select the appropriate command buffer for that image and execute it with vkQueueSubmit. Finally, we return the image to the swapchain for presentation to the screen with vkQueuePresentKHR.

Operations that are submitted to queues are executed asynchronously. Therefore we have to use synchronization objects like semaphores to ensure a correct order of execution. Execution of the draw command buffer must be set up to wait on image acquisition to finish, otherwise it may occur that we start rendering to an image that is still being read for presentation on the screen. The vkQueuePresentKHR call in turn needs to wait for rendering to be finished, for which we'll use a second semaphore that is signaled after rendering completes.

Summary

This whirlwind tour should give you a basic understanding of the work ahead for drawing the first triangle. A real-world program contains more steps, like allocating vertex buffers, creating uniform buffers and uploading texture images that will be covered in subsequent chapters, but we'll start simple because Vulkan has enough of a steep learning curve as it is. Note that we'll cheat a bit by initially embedding the vertex coordinates in the vertex shader instead of using a vertex buffer. That's because managing vertex buffers requires some familiarity with command buffers first.

So in short, to draw the first triangle we need to:

- Create a VkInstance

- Select a supported graphics card (VkPhysicalDevice)

- Create a VkDevice and VkQueue for drawing and presentation

- Create a window, window surface and swapchain

- Wrap the swapchain images into VkImageView

- Create a render pass that specifies the render targets and usage

- Create framebuffers for the render pass

- Set up the graphics pipeline

- Allocate and record a command buffer with the draw commands for every possible swapchain image

- Draw frames by acquiring images, submitting the right draw command buffer and returning the images back to the swapchain

It's a lot of steps, but the purpose of each individual step will be made very simple and clear in the upcoming chapters. If you're confused about the relation of a single step compared to the whole program, you should refer back to this chapter.

API concepts

The Vulkan API is defined in terms of the C programming language. The canonical version of the Vulkan API is defined in the Vulkan API Registry, an XML file that serves as a machine-readable definition of the Vulkan API.

The Vulkan headers that are part of the Vulkan SDK you will be installing in the next chapter are generated from this Vulkan API Registry. However, we will not be using these headers, directly or indirectly, because vulkanalia includes a Rust interface to the Vulkan API generated from the Vulkan API registry that is independent of the C interface provided by the Vulkan SDK.

The foundation of vulkanalia is the vulkanalia-sys crate which defines the raw types (commands, enums, bitmasks, structs, etc.) defined by the Vulkan API Registry. These raw types are re-exported from the vulkanalia crate in the vk module along with some other items generated from the Vulkan API Registry which serve as the thin wrapper around the Vulkan API previously mentioned in the introduction.

Type Names

Because Rust has support for namespaces unlike C, the vulkanalia API omits the parts of Vulkan type names that are used for namespacing purposes in C. More specifically, Vulkan types such as structs, unions, and enums lose their Vk prefix. For example, the VkInstanceCreateInfo struct becomes the InstanceCreateInfo struct in vulkanalia and can be found in the previously mentioned vk module.

Going forward, this tutorial will refer to the Vulkan types defined by vulkanalia using the vk:: module prefix to make it clear the type represents something generated from the Vulkan API Registry.

These type names will each be links to the vulkanalia documentation for the referenced type. The vulkanalia documentation for Vulkan types will also contain a link to the Vulkan specification for the type which you can use to learn more about the purpose and usage of the type.

A few type name examples:

Enums

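vulkanalia models Vulkan enums as structs and models variants as associated constants for these structs. Rust enums are not used for Vulkan enums because a Rust enum must always hold one of its declared variants, so receiving an unrecognized value over FFI (for example, one added by a newer Vulkan version or an extension) would be undefined behavior.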

Since associated constants are namespaced to the struct they are for, we don't need to worry about name conflicts between the values of different Vulkan enums (or enums from other libraries) like we would in C. So like with type names, vulkanalia omits the parts of variant names used for namespacing purposes.

For example, the VK_OBJECT_TYPE_INSTANCE variant is the INSTANCE value for the VkObjectType enum. In vulkanalia, this variant becomes vk::ObjectType::INSTANCE.
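The struct-with-associated-constants pattern is easy to reproduce in plain Rust. The sketch below is a simplified model written for this explanation, not the definition generated by vulkanalia; only the numeric values of the constants are taken from the Vulkan headers.

```rust
/// Simplified model of a Vulkan enum represented as a struct.
/// `#[repr(transparent)]` gives it the same layout as the wrapped integer,
/// so any integer coming from C is a valid `ObjectType`, even one this
/// code has never heard of.
#[repr(transparent)]
#[derive(Clone, Copy, Debug, PartialEq, Eq, Hash)]
pub struct ObjectType(i32);

impl ObjectType {
    pub const UNKNOWN: Self = Self(0);
    pub const INSTANCE: Self = Self(1);
    pub const PHYSICAL_DEVICE: Self = Self(2);

    /// Wraps a raw integer value without any validation.
    pub const fn from_raw(value: i32) -> Self {
        Self(value)
    }

    /// Returns the underlying integer value.
    pub const fn as_raw(self) -> i32 {
        self.0
    }
}
```

With this shape, `ObjectType::INSTANCE` reads much like an enum variant, while `ObjectType::from_raw(123_456)` is still a perfectly well-defined value that simply compares unequal to every named constant.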

Bitmasks

vulkanalia models Vulkan bitmasks as structs and models bitflags as associated constants for these structs. These structs and associated constants are generated by the bitflags! macro from the bitflags crate.

Like with variants, the parts of bitmask names used for namespacing purposes are omitted.

For example, the VK_BUFFER_USAGE_TRANSFER_SRC_BIT bitflag is the TRANSFER_SRC bitflag for the VkBufferUsageFlags bitmask. In vulkanalia, this becomes vk::BufferUsageFlags::TRANSFER_SRC.
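To show roughly what such a bitmask type offers, here is a hand-written approximation. vulkanalia generates its types with the bitflags! macro, so this sketch only mirrors the general shape (a few constants, `bits`, `contains`, and the `|` operator) and should not be read as the real definition; the bit values match the Vulkan headers.

```rust
use std::ops::BitOr;

/// Hand-written approximation of a Vulkan bitmask type.
#[repr(transparent)]
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct BufferUsageFlags(u32);

impl BufferUsageFlags {
    pub const TRANSFER_SRC: Self = Self(0x1);
    pub const TRANSFER_DST: Self = Self(0x2);
    pub const VERTEX_BUFFER: Self = Self(0x80);

    /// A value with no bitflags set.
    pub const fn empty() -> Self {
        Self(0)
    }

    /// Returns the raw bits, as they would be passed to Vulkan.
    pub const fn bits(self) -> u32 {
        self.0
    }

    /// Returns true if every bitflag in `other` is also set in `self`.
    pub const fn contains(self, other: Self) -> bool {
        (self.0 & other.0) == other.0
    }
}

impl BitOr for BufferUsageFlags {
    type Output = Self;

    /// Combines two sets of bitflags.
    fn bitor(self, rhs: Self) -> Self {
        Self(self.0 | rhs.0)
    }
}
```

A buffer that is both a transfer destination and a vertex buffer would then be described as `BufferUsageFlags::TRANSFER_DST | BufferUsageFlags::VERTEX_BUFFER`.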

Commands

The types for raw Vulkan commands like vkCreateInstance are defined in vulkanalia as function pointer type aliases with the PFN_ (pointer to function) prefix. So the vulkanalia type alias for vkCreateInstance is vk::PFN_vkCreateInstance.

These function pointer types are not enough on their own to call Vulkan commands; we first need to load the commands described by these types. The Vulkan specification has a detailed description of how this is done, but I will present a simplified version here.

The first Vulkan command to load is vkGetInstanceProcAddr. This command is loaded in a platform-specific manner, but vulkanalia provides an optional integration with libloading that we will be using in this tutorial to load this command from a Vulkan shared library. vkGetInstanceProcAddr can then be used to load the other Vulkan commands we want to call.

However, there may be multiple versions of Vulkan commands available depending on the Vulkan implementations on your system. For example, if your system has both a dedicated NVIDIA GPU and an integrated Intel GPU, there may be separate implementations of device-specific Vulkan commands like allocate_memory for each device. In cases like this, vkGetInstanceProcAddr will return a command that will dispatch calls to the appropriate device-specific command depending on the device in use.

To avoid the runtime overhead of this dispatch, the vkGetDeviceProcAddr command can be used to directly load these device-specific Vulkan commands. This command is loaded in the same manner as vkGetInstanceProcAddr.

We will be calling dozens of Vulkan commands in this tutorial. Fortunately, we won't have to load them manually: vulkanalia provides structs which can be used to easily load all the Vulkan commands in one of four categories:

- vk::StaticCommands – The Vulkan commands loaded in a platform-specific manner that can then be used to load the other commands (i.e., vkGetInstanceProcAddr and vkGetDeviceProcAddr)

- vk::EntryCommands – The Vulkan commands loaded using vkGetInstanceProcAddr and a null Vulkan instance. These commands are not tied to a specific Vulkan instance and are used to query instance support and create instances

- vk::InstanceCommands – The Vulkan commands loaded using vkGetInstanceProcAddr and a valid Vulkan instance. These commands are tied to a specific Vulkan instance and, among other things, are used to query device support and create devices

- vk::DeviceCommands – The Vulkan commands loaded using vkGetDeviceProcAddr and a valid Vulkan device. These commands are tied to a specific Vulkan device and expose most of the functionality you would expect from a graphics API
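The general pattern behind these structs (look up each command by name once, then keep the function pointers around) can be illustrated with a toy model. Everything in this sketch is hypothetical: `PfnCommand`, `Commands`, and `example_loader` are invented for the illustration, the two "commands" are ordinary Rust functions, and the loader closure merely plays the role that vkGetInstanceProcAddr plays in Vulkan.

```rust
/// Hypothetical signature shared by the commands in this model.
pub type PfnCommand = fn(u32) -> u32;

/// Hypothetical group of loaded commands, in the spirit of the command
/// structs described above.
pub struct Commands {
    pub double: PfnCommand,
    pub increment: PfnCommand,
}

impl Commands {
    /// Loads every command through `loader`, which maps a command name to
    /// a function pointer, or `None` if the command is unavailable.
    pub fn load(loader: impl Fn(&str) -> Option<PfnCommand>) -> Result<Self, String> {
        let get = |name: &str| loader(name).ok_or_else(|| format!("missing command: {name}"));
        Ok(Self {
            double: get("double")?,
            increment: get("increment")?,
        })
    }
}

fn double_impl(x: u32) -> u32 {
    x * 2
}

fn increment_impl(x: u32) -> u32 {
    x + 1
}

/// Hypothetical loader standing in for the platform's Vulkan library.
pub fn example_loader(name: &str) -> Option<PfnCommand> {
    match name {
        "double" => Some(double_impl as PfnCommand),
        "increment" => Some(increment_impl as PfnCommand),
        _ => None,
    }
}
```

After `Commands::load(example_loader)` succeeds, a command is invoked through its stored pointer, for example `(commands.double)(21)`. The real structs do the same kind of lookup for the actual Vulkan commands, with raw `extern` function pointers instead of safe Rust functions.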

These structs allow you to easily load and call raw Vulkan commands from Rust, but vulkanalia offers wrappers around the raw Vulkan commands which make calling them from Rust easier and less error-prone.

Command wrappers

An example of a typical Vulkan command signature looks like this in C:

    VkResult vkEnumerateInstanceExtensionProperties(
        const char* pLayerName,
        uint32_t* pPropertyCount,
        VkExtensionProperties* pProperties
    );

Someone who is familiar with the conventions of the Vulkan API could quickly see how this command is supposed to be used from this signature alone despite it not including some key information.

For those new to the Vulkan API, a look at the documentation for this command will likely be more illuminating. The description of the behavior of this command in the documentation suggests that using this command to list the available extensions for the Vulkan instance will be a multi-step process:

- Call the command to get the number of extensions

- Allocate a buffer that can contain the outputted number of extensions

- Call the command again to populate the buffer with the extensions

So in C++, this might look like this (ignoring the result of the command for simplicity):

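    // 1. Query the number of available extensions.
    uint32_t pPropertyCount;
    vkEnumerateInstanceExtensionProperties(NULL, &pPropertyCount, NULL);

    // 2. Allocate a buffer with room for that many extensions.
    std::vector<VkExtensionProperties> pProperties(pPropertyCount);

    // 3. Call the command again to fill the buffer.
    vkEnumerateInstanceExtensionProperties(NULL, &pPropertyCount, pProperties.data());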

The Rust signature of the wrapper for vkEnumerateInstanceExtensionProperties looks like this:

    unsafe fn enumerate_instance_extension_properties(
        &self,
        layer_name: Option<&CStr>,
    ) -> VkResult<Vec<ExtensionProperties>>;

This command wrapper makes the usage of vkEnumerateInstanceExtensionProperties from Rust easier, less error-prone, and more idiomatic in several ways:

- The optionality of the layer_name parameter is encoded in the function signature. That this parameter is optional is not captured in the C function signature; one would need to check the Vulkan specification for this information

- The fallibility of the command is modelled by returning a Result (VkResult<T> is a type alias for Result<T, vk::ErrorCode>). This allows us to take advantage of Rust's strong error handling capabilities as well as be warned by the compiler if we neglect to check the result of a fallible command

- The command wrapper handles the three step process described above internally and returns a Vec containing the extension properties
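To give a feel for what such a wrapper does internally, here is a self-contained sketch of the count, allocate, and fill sequence. It is not vulkanalia's implementation: `raw_enumerate` is a hypothetical stand-in for a raw Vulkan command (it reports three made-up entries and always succeeds), `ExtensionProperties` is trimmed to a single field, and the sketch ignores the case where the number of entries changes between the two calls.

```rust
/// Hypothetical, trimmed stand-in for `VkExtensionProperties`.
#[derive(Clone, Debug, Default, PartialEq, Eq)]
pub struct ExtensionProperties {
    pub spec_version: u32,
}

/// Hypothetical raw command following the two-call convention: with a null
/// `properties` pointer it only writes the count; otherwise it fills up to
/// `*count` elements and writes back how many it wrote. Returns 0 on success.
///
/// # Safety
/// `count` must be valid for reads and writes, and `properties` must be
/// null or point to at least `*count` writable elements.
pub unsafe fn raw_enumerate(count: *mut u32, properties: *mut ExtensionProperties) -> i32 {
    const AVAILABLE: [u32; 3] = [25, 70, 10];
    unsafe {
        if properties.is_null() {
            *count = AVAILABLE.len() as u32;
            return 0;
        }
        let n = (*count as usize).min(AVAILABLE.len());
        for (i, version) in AVAILABLE.iter().take(n).enumerate() {
            properties.add(i).write(ExtensionProperties { spec_version: *version });
        }
        *count = n as u32;
    }
    0
}

/// Wrapper that hides the three steps and reports failure through `Result`.
pub fn enumerate() -> Result<Vec<ExtensionProperties>, i32> {
    let mut count = 0u32;
    // 1. Ask how many elements there are.
    let code = unsafe { raw_enumerate(&mut count, std::ptr::null_mut()) };
    if code != 0 {
        return Err(code);
    }
    // 2. Allocate a buffer of that size.
    let mut properties = vec![ExtensionProperties::default(); count as usize];
    // 3. Let the command fill the buffer.
    let code = unsafe { raw_enumerate(&mut count, properties.as_mut_ptr()) };
    if code != 0 {
        return Err(code);
    }
    properties.truncate(count as usize);
    Ok(properties)
}
```

The caller of `enumerate` never sees a raw pointer or a separate count, which is the ergonomic gain the list above describes.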

Note that command wrappers are still unsafe because while vulkanalia can eliminate certain classes of errors (e.g., passing a null layer name to this command), there are still plenty of things that can go horribly wrong and cause fun things like segfaults. You can always check the Valid Usage section of the Vulkan documentation for a command to see the invariants that need to be upheld to call that command validly.

You likely noticed the &self parameter in the above command wrapper. These command wrappers are defined in traits which are implemented for types exposed by vulkanalia. These traits can be separated into two categories: version traits and extension traits. The version traits offer command wrappers for the commands which are a standard part of Vulkan whereas the extension traits offer command wrappers for the commands which are defined as part of Vulkan extensions.

For example, enumerate_instance_extension_properties is in the vk::EntryV1_0 trait since it is a non-extension Vulkan command that is part of Vulkan 1.0 and not dependent on a Vulkan instance or device. A Vulkan command like cmd_draw_indirect_count that was added in Vulkan 1.2 and is dependent on a Vulkan device would be in the vk::DeviceV1_2 trait.

vk::KhrSurfaceExtensionInstanceCommands is an example of an extension trait that we will be using in future chapters to call Vulkan commands like destroy_surface_khr that are defined in the VK_KHR_surface extension. Note InstanceCommands at the end of the name of this extension trait. This suffix indicates that this extension trait provides the instance-level commands added by the VK_KHR_surface extension. Most Vulkan extensions only add commands at the instance-level or device-level, not both. However, VK_KHR_swapchain is an example of an extension that does add commands at both the instance-level and device-level, so it has two extension traits in vulkanalia: vk::KhrSwapchainExtensionInstanceCommands and vk::KhrSwapchainExtensionDeviceCommands.

These version and extension traits are defined for types which contain both the loaded commands and the required Vulkan instance or device (if any). These types have been lovingly hand-crafted and are not part of the generated Vulkan bindings in the vk module of vulkanalia. They will be used in future chapters and are the Entry, Instance, and Device structs.

Going forward, this tutorial will continue to refer to these command wrappers directly by name as in this section (e.g., create_instance). You can visit the vulkanalia documentation for the command wrapper for more information like which trait the command wrapper is defined in.

Builders

The Vulkan API heavily utilizes structs as parameters for Vulkan commands. The Vulkan structs used as command parameters have a field which indicates the type of the struct. In the C API, this field (sType) would need to be set explicitly. For example, here we are populating an instance of VkInstanceCreateInfo and then using it to call vkCreateInstance in C++:

    std::vector<const char*> extensions{/* 3 extension names */};

    // Value-initialize the struct so that fields we don't set are zeroed.
    VkInstanceCreateInfo info{};
    info.sType = VK_STRUCTURE_TYPE_INSTANCE_CREATE_INFO;
    info.enabledExtensionCount = static_cast<uint32_t>(extensions.size());
    info.ppEnabledExtensionNames = extensions.data();

    VkInstance instance;
    vkCreateInstance(&info, NULL, &instance);

You can still populate parameter structs in this manner when using vulkanalia, but vulkanalia provides builders which simplify the construction of these parameter structs. The vulkanalia builder for vk::InstanceCreateInfo is vk::InstanceCreateInfoBuilder. Using this builder the above code would become:

    let extensions = &[/* 3 extension names */];

    let info = vk::InstanceCreateInfo::builder()
        .enabled_extension_names(extensions)
        .build();

    let instance = entry.create_instance(&info, None).unwrap();

Note the following differences:

- A value is not provided for the s_type field. This is because the builder provides the correct value for this field (vk::StructureType::INSTANCE_CREATE_INFO) automatically

- A value is not provided for the enabled_extension_count field. This is because the enabled_extension_names builder method uses the length of the provided slice to set this field automatically

However, the above Rust code involves a certain degree of danger. The builders have lifetimes which enforce that the references stored in them live at least as long as the builders themselves. In the above example, this means that the Rust compiler will make sure that the slice passed to the enabled_extension_names method lives at least as long as the builder. However, as soon as we call .build() to get the underlying vk::InstanceCreateInfo struct the builder lifetimes are discarded. This means that the Rust compiler can no longer prevent us from shooting ourselves in the foot if we try to dereference a pointer to a slice that no longer exists.

The following code will (hopefully) crash since the temporary Vec passed to enabled_extension_names will have been dropped by the time we call create_instance with our vk::InstanceCreateInfo struct:

let info = vk::InstanceCreateInfo::builder()

.enabled_extension_names(&vec![/* 3 extension names */])

.build();

let instance = entry.create_instance(&info, None).unwrap();

Fortunately, vulkanalia has a solution for this: don't call build(), and pass the builder itself to the command wrapper. Anywhere a Vulkan struct is expected in a command wrapper you can instead provide the associated builder. If you remove the build() call from the above code, the Rust compiler will be able to use the lifetimes on the builder to reject this bad code with error[E0716]: temporary value dropped while borrowed.
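To make the lifetime argument concrete, here is a minimal sketch of the idea using only the standard library. CreateInfo, CreateInfoBuilder, and sum_names are hypothetical stand-ins, not vulkanalia's actual types. The sketch only assumes that a builder can carry a lifetime through PhantomData while the built struct holds a bare pointer.

```rust
use std::marker::PhantomData;

/// Hypothetical stand-in for a Vulkan parameter struct: a count plus a raw pointer.
#[repr(C)]
#[derive(Clone, Copy, Debug)]
pub struct CreateInfo {
    pub name_count: u32,
    pub names: *const u8,
}

/// Hypothetical builder that remembers how long the borrowed slice lives.
#[derive(Clone, Copy, Debug)]
pub struct CreateInfoBuilder<'a> {
    value: CreateInfo,
    _marker: PhantomData<&'a [u8]>,
}

impl<'a> CreateInfoBuilder<'a> {
    pub fn new() -> Self {
        Self {
            value: CreateInfo { name_count: 0, names: std::ptr::null() },
            _marker: PhantomData,
        }
    }

    /// Stores the pointer and derives the count from the slice length.
    pub fn names(mut self, names: &'a [u8]) -> Self {
        self.value.name_count = names.len() as u32;
        self.value.names = names.as_ptr();
        self
    }

    /// Discards the lifetime: after this the compiler no longer tracks the borrow.
    pub fn build(self) -> CreateInfo {
        self.value
    }
}

/// Stand-in for a command wrapper that accepts the builder directly.
pub fn sum_names(info: &CreateInfoBuilder<'_>) -> u32 {
    if info.value.names.is_null() {
        return 0;
    }
    // SAFETY: the builder's lifetime guarantees the borrowed slice is still alive.
    let names = unsafe {
        std::slice::from_raw_parts(info.value.names, info.value.name_count as usize)
    };
    names.iter().map(|&b| u32::from(b)).sum()
}
```

With a builder shaped like this, writing `let b = CreateInfoBuilder::new().names(&vec![1, 2, 3]);` and then using `b` on a later line should be rejected by the compiler, because the temporary Vec is dropped while it is still borrowed. Calling build() first hides that borrow, which is the danger described above.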

Preludes

vulkanalia offers prelude modules that expose the basic types needed to use the crate. One prelude module is available per Vulkan version and each will expose the relevant command traits along with other very frequently used types:

// Vulkan 1.0

use vulkanalia::prelude::v1_0::*;

// Vulkan 1.1

use vulkanalia::prelude::v1_1::*;

// Vulkan 1.2

use vulkanalia::prelude::v1_2::*;

Validation layers

As mentioned earlier, Vulkan is designed for high performance and low driver overhead. Therefore it will include very limited error checking and debugging capabilities by default. The driver will often crash instead of returning an error code if you do something wrong, or worse, it will appear to work on your graphics card and completely fail on others.

Vulkan allows you to enable extensive checks through a feature known as validation layers. Validation layers are pieces of code that can be inserted between the API and the graphics driver to do things like running extra checks on function parameters and tracking memory management problems. The nice thing is that you can enable them during development and then completely disable them when releasing your application for zero overhead. Anyone can write their own validation layers, but the Vulkan SDK by LunarG provides a standard set of validation layers that we'll be using in this tutorial. You also need to register a callback function to receive debug messages from the layers.

Because Vulkan is so explicit about every operation and the validation layers are so extensive, it can actually be a lot easier to find out why your screen is black compared to OpenGL and Direct3D!

Development environment

In this chapter we'll set up your environment for developing Vulkan applications by installing the Vulkan SDK for your operating system. This tutorial assumes you already have a working Rust (1.88+) development environment.

Cargo project

First let's create our Cargo project:

cargo new vulkan-tutorial

After this command has executed, you'll have a folder called vulkan-tutorial containing a minimal Cargo project which produces a Rust executable.

Open the Cargo.toml file in the folder and add these dependencies in the pre-existing [dependencies] section:

anyhow = "1"

log = "0.4"

cgmath = "0.18"

png = "0.17"

pretty_env_logger = "0.5"

thiserror = "1"

tobj = { version = "3", features = ["log"] }

vulkanalia = { version = "=0.35.0", features = ["libloading", "provisional", "window"] }

winit = "0.29"

- anyhow – used for simple error handling

- log – used for logging statements

- cgmath – used as a Rust replacement for GLM (graphics math library)

- png – used to load PNGs to use as textures

- pretty_env_logger – used to print our logs to the console

- thiserror – used to define custom error types without boilerplate

- tobj – used to load 3D models in the Wavefront .obj format

- vulkanalia – used to call the Vulkan API

- winit – used to create a window to render to

Vulkan SDK

The most important component you'll need for developing Vulkan applications is the SDK. It includes the headers, standard validation layers, debugging tools and a loader for the Vulkan functions. The loader looks up the functions in the driver at runtime, similarly to GLEW for OpenGL - if you're familiar with that.

Windows

The SDK can be downloaded from the LunarG website using the buttons at the bottom of the page. You don't have to create an account, but it will give you access to some additional documentation that may be useful to you.

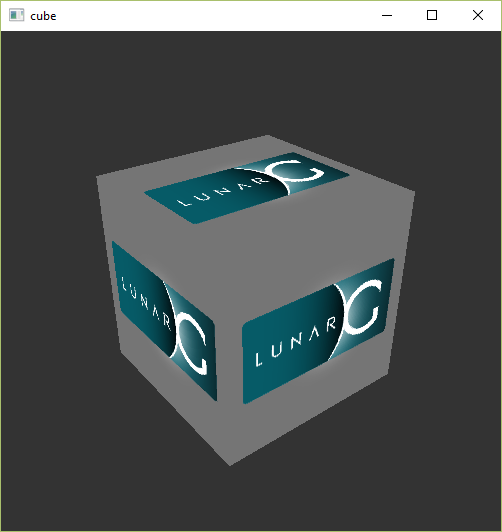

Proceed through the installation and pay attention to the install location of the SDK. The first thing we'll do is verify that your graphics card and driver properly support Vulkan. Go to the directory where you installed the SDK, open the Bin directory and run the vkcube.exe demo. You should see the following:

If you receive an error message then ensure that your drivers are up-to-date, include the Vulkan runtime and that your graphics card is supported. See the introduction chapter for links to drivers from the major vendors.

There are two other programs in this directory that will be useful for development: glslangValidator.exe and glslc.exe. They will be used to compile shaders from the human-readable GLSL to bytecode. We'll cover this in depth in the shader modules chapter. The Bin directory also contains the binaries of the Vulkan loader and the validation layers, while the Lib directory contains the libraries.

Feel free to explore the other files, but we won't need them for this tutorial.

Linux

These instructions will be aimed at Ubuntu users, but you may be able to follow along by changing the apt commands to the package manager commands that are appropriate for you.

The most important components you'll need for developing Vulkan applications on Linux are the Vulkan loader, validation layers, and a couple of command-line utilities to test whether your machine is Vulkan-capable:

- sudo apt install vulkan-tools – Command-line utilities, most importantly vulkaninfo and vkcube. Run these to confirm your machine supports Vulkan.

- sudo apt install libvulkan-dev – Installs the Vulkan loader. The loader looks up the functions in the driver at runtime, similarly to GLEW for OpenGL - if you're familiar with that.

- sudo apt install vulkan-validationlayers-dev – Installs the standard validation layers. These are crucial when debugging Vulkan applications, and we'll discuss them in an upcoming chapter.

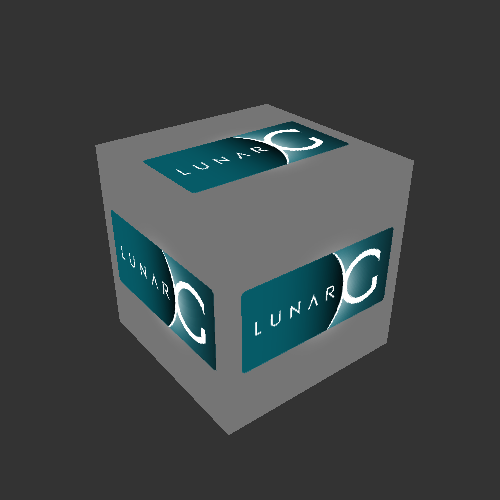

If installation was successful, you should be all set with the Vulkan portion. Remember to run vkcube and ensure you see the following pop up in a window:

If you receive an error message then ensure that your drivers are up-to-date, include the Vulkan runtime and that your graphics card is supported. See the introduction chapter for links to drivers from the major vendors.

macOS

The SDK can be downloaded from the LunarG website using the buttons at the bottom of the page. You don't have to create an account, but it will give you access to some additional documentation that may be useful to you.

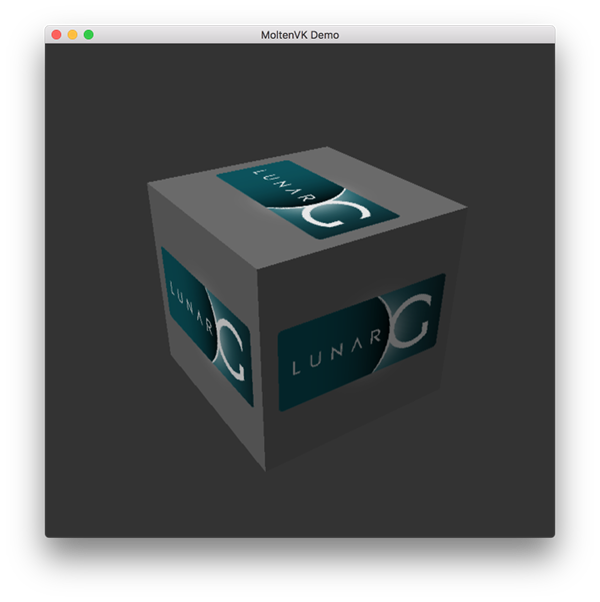

The SDK version for MacOS internally uses MoltenVK. There is no native support for Vulkan on MacOS, so what MoltenVK does is act as a layer that translates Vulkan API calls to Apple's Metal graphics framework. With this you can take advantage of debugging and performance benefits of Apple's Metal framework.

After downloading it, simply extract the contents to a folder of your choice. Inside the extracted folder, in the Applications folder you should have some executable files that will run a few demos using the SDK. Run the vkcube executable and you will see the following:

Setup Environment

When running a Vulkan application outside of the Vulkan SDK directory, you will likely also need to run the setup-env.sh script from the Vulkan SDK to avoid errors about the inability to find Vulkan libraries (e.g., libvulkan.dylib). If you installed the Vulkan SDK in the default location, this script should be located in a path like this: ~/VulkanSDK/1.3.280.1/setup-env.sh (replace the version number to match your Vulkan SDK installation).

You can also add this script to be executed by default by adding it to your shell's setup script. For example you could add a statement like this to ~/.zshrc:

source ~/VulkanSDK/1.3.280.1/setup-env.sh

FAQ

This page lists solutions to common problems that you may encounter while developing Vulkan applications.

-

(macOS) I installed the Vulkan SDK, but I get an error about

libvulkan.dylibnot being found when I run a Vulkan application - see theSetup Environmentsection of the Vulkan SDK setup instructions for macOS -

I get an access violation error in the core validation layer – Make sure that MSI Afterburner / RivaTuner Statistics Server is not running, because it has some compatibility problems with Vulkan.

-

I don't see any messages from the validation layers / Validation layers are not available – First make sure that the validation layers get a chance to print errors by keeping the terminal open after your program exits. You can do this from Visual Studio by running your program with Ctrl-F5 instead of F5, and on Linux by executing your program from a terminal window. If there are still no messages and you are sure that validation layers are turned on, then you should ensure that your Vulkan SDK is correctly installed by following the "Verify the Installation" instructions on this page. Also ensure that your SDK version is at least 1.1.106.0 to support the

VK_LAYER_KHRONOS_validationlayer. -

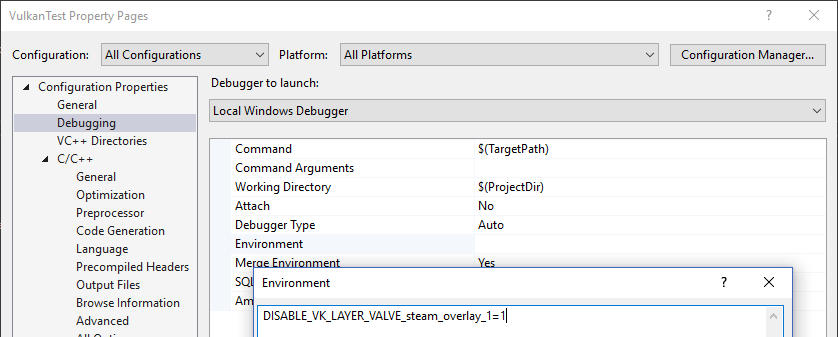

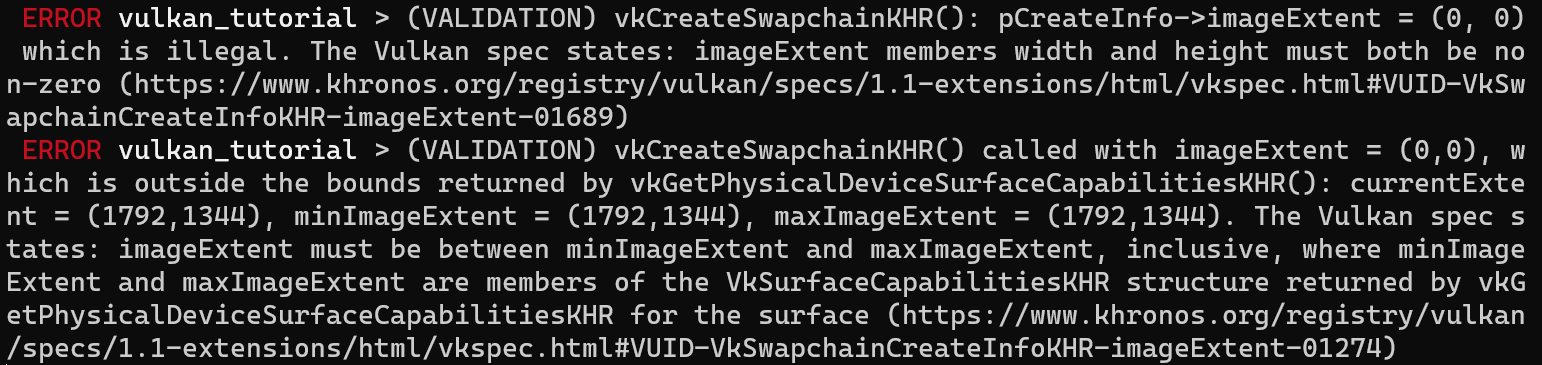

vkCreateSwapchainKHR triggers an error in SteamOverlayVulkanLayer64.dll – This appears to be a compatibility problem in the Steam client beta. There are a few possible workarounds:

- Opt out of the Steam beta program.

- Set the

DISABLE_VK_LAYER_VALVE_steam_overlay_1environment variable to1 - Delete the Steam overlay Vulkan layer entry in the registry under

HKEY_LOCAL_MACHINE\SOFTWARE\Khronos\Vulkan\ImplicitLayers

Example:

Base code

Code: main.rs

In the Development environment chapter we created a Cargo project and added the necessary dependencies. In this chapter we will be replacing the code in the src/main.rs file with the following code:

#![allow(
    dead_code,
    unsafe_op_in_unsafe_fn,
    unused_variables,
    clippy::too_many_arguments,
    clippy::unnecessary_wraps
)]

use anyhow::Result;
use winit::dpi::LogicalSize;
use winit::event::{Event, WindowEvent};
use winit::event_loop::EventLoop;
use winit::window::{Window, WindowBuilder};

fn main() -> Result<()> {
    pretty_env_logger::init();

    // Window

    let event_loop = EventLoop::new()?;
    let window = WindowBuilder::new()
        .with_title("Vulkan Tutorial (Rust)")
        .with_inner_size(LogicalSize::new(1024, 768))
        .build(&event_loop)?;

    // App

    let mut app = unsafe { App::create(&window)? };
    event_loop.run(move |event, elwt| {
        match event {
            // Request a redraw when all events were processed.
            Event::AboutToWait => window.request_redraw(),
            Event::WindowEvent { event, .. } => match event {
                // Render a frame if our Vulkan app is not being destroyed.
                WindowEvent::RedrawRequested if !elwt.exiting() => unsafe { app.render(&window) }.unwrap(),
                // Destroy our Vulkan app.
                WindowEvent::CloseRequested => {
                    elwt.exit();
                    unsafe { app.destroy(); }
                }
                _ => {}
            }
            _ => {}
        }
    })?;

    Ok(())
}

/// Our Vulkan app.
#[derive(Clone, Debug)]
struct App {}

impl App {
    /// Creates our Vulkan app.
    unsafe fn create(window: &Window) -> Result<Self> {
        Ok(Self {})
    }

    /// Renders a frame for our Vulkan app.
    unsafe fn render(&mut self, window: &Window) -> Result<()> {
        Ok(())
    }

    /// Destroys our Vulkan app.
    unsafe fn destroy(&mut self) {}
}

/// The Vulkan handles and associated properties used by our Vulkan app.
#[derive(Clone, Debug, Default)]
struct AppData {}

We first import anyhow::Result so we can use anyhow's Result type for all of the fallible functions in our program. Next we import all of the winit types we need to create a window and start an event loop for that window.

Next comes our main function (which returns an anyhow::Result type). This function starts by initializing pretty_env_logger which will print our logs to the console (as shown later).

Then we create an event loop and a window to render to using winit. The window size is given as a LogicalSize, which scales the window according to the DPI of your display. If you want to know more about UI scaling you can read the relevant winit documentation.

Next we create an instance of our Vulkan app (App) and enter our rendering loop. This loop continually renders our scene to the window until you request that the window be closed, at which point the app is destroyed and the program exits. Before rendering a frame we check that the event loop is not exiting (!elwt.exiting()). Without this check the program would keep attempting to render while the app is being destroyed, which would most likely crash it when it accesses Vulkan resources that have already been destroyed.

Lastly comes App and AppData. App will be used to implement the setup, rendering, and destruction logic required for the Vulkan program we will be building over the course of the following chapters. AppData will serve simply as a container for the large number of Vulkan resources we will need to create and initialize which will allow for them to be easily passed to functions to be read and/or modified. AppData implements the Default trait so we can easily construct an instance of this struct with empty/default values.

This will come in handy because many of the following chapters consist of adding a function which takes a &mut AppData and creates and initializes Vulkan resources. These functions will then be called from our App::create constructor method to set up our Vulkan app. Then, before our program exits, these Vulkan resources will be released by our App::destroy method.
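The pattern described here can be sketched without any Vulkan calls. In the sketch below, FakeHandle, the data field on App, and the create_surface and create_swapchain functions are all hypothetical placeholders chosen for illustration. The real functions and fields are introduced chapter by chapter.

```rust
/// Hypothetical handle type standing in for a Vulkan object.
#[derive(Clone, Copy, Debug, Default, PartialEq, Eq)]
pub struct FakeHandle(pub u64);

/// Container for the resources the app creates (all defaults mean "not created").
#[derive(Clone, Debug, Default)]
pub struct AppData {
    pub surface: FakeHandle,
    pub swapchain: FakeHandle,
}

pub struct App {
    pub data: AppData,
}

/// Each setup step takes `&mut AppData` and fills in the resources it creates.
pub fn create_surface(data: &mut AppData) -> Result<(), String> {
    data.surface = FakeHandle(1);
    Ok(())
}

/// Later steps can read what earlier steps stored.
pub fn create_swapchain(data: &mut AppData) -> Result<(), String> {
    if data.surface == FakeHandle::default() {
        return Err("surface must be created first".to_string());
    }
    data.swapchain = FakeHandle(2);
    Ok(())
}

impl App {
    /// Calls the setup functions in order, starting from empty defaults.
    pub fn create() -> Result<Self, String> {
        let mut data = AppData::default();
        create_surface(&mut data)?;
        create_swapchain(&mut data)?;
        Ok(Self { data })
    }

    /// Releases resources in the reverse order of creation.
    pub fn destroy(&mut self) {
        self.data.swapchain = FakeHandle::default();
        self.data.surface = FakeHandle::default();
    }
}
```

Keeping every resource in one struct means a new setup step only needs a new field and a new function, and the order of calls in create documents the dependencies between resources.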

A Note on Safety

All Vulkan commands, both the raw commands and their command wrappers, are marked unsafe in vulkanalia. This is because most Vulkan commands have restrictions on how they can be called that cannot be enforced by Rust (unless a higher-level interface that hides the Vulkan API is provided like in vulkano).

This tutorial will be addressing this fact by simply marking every function and method in which a Vulkan command is called as unsafe. This helps keep syntactical noise to a minimum, but in a more realistic program you may want to expose your own safe interface that enforces the invariants required for the Vulkan commands you are calling.
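As a rough illustration of what "exposing a safe interface" can mean, the sketch below wraps an unsafe function in a safe one that checks the required invariant first. Both raw_read and checked_read are hypothetical names and have nothing to do with vulkanalia. They only show the general shape of such a wrapper.

```rust
/// Stand-in for a raw command. The caller must guarantee that `values`
/// points to at least `index + 1` readable elements.
pub unsafe fn raw_read(values: *const u32, index: usize) -> u32 {
    unsafe { *values.add(index) }
}

/// Safe wrapper: it checks the invariant itself, so callers cannot violate it.
pub fn checked_read(values: &[u32], index: usize) -> Option<u32> {
    if index < values.len() {
        // SAFETY: the pointer comes from a live slice and the index was checked.
        Some(unsafe { raw_read(values.as_ptr(), index) })
    } else {
        None
    }
}
```

The tutorial does not take this approach, since the invariants of real Vulkan commands are far more involved than a bounds check.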

Resource management

Just like each chunk of memory allocated in C with malloc requires a corresponding call to free, every Vulkan object that we create needs to be explicitly destroyed when we no longer need it. In Rust it is possible to perform automatic resource management using RAII possibly combined with smart pointers like Rc or Arc. However, the author of https://vulkan-tutorial.com chose to be explicit about allocation and deallocation of Vulkan objects in this tutorial and I have decided to take the same approach. After all, Vulkan's niche is to be explicit about every operation to avoid mistakes, so it's good to be explicit about the lifetime of objects to learn how the API works.

After following this tutorial, you could implement automatic resource management by writing Rust structs that wrap Vulkan objects and release them in their Drop implementation. RAII is the recommended model for larger Vulkan programs, but for learning purposes it's always good to know what's going on behind the scenes.

Vulkan objects are either created directly with commands like create_xxx, or allocated through another object with commands like allocate_xxx. After making sure that an object is no longer used anywhere, you need to destroy it with the counterparts destroy_xxx and free_xxx. The parameters for these commands generally vary for different types of objects, but there is one parameter that they all share: allocator. This is an optional parameter that allows you to specify callbacks for a custom memory allocator. We will ignore this parameter in the tutorial and always pass None as argument.
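For readers curious about the RAII alternative mentioned above, here is a minimal sketch of a wrapper that releases its resource in Drop. Guard and DestroyLog are hypothetical. A real wrapper would call the matching destroy_xxx or free_xxx command where this sketch records a name in a log.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Hypothetical log that records which objects were "destroyed", in order.
pub type DestroyLog = Rc<RefCell<Vec<&'static str>>>;

/// Hypothetical wrapper that owns one object and releases it when dropped.
pub struct Guard {
    name: &'static str,
    log: DestroyLog,
}

impl Guard {
    pub fn new(name: &'static str, log: &DestroyLog) -> Self {
        Self { name, log: Rc::clone(log) }
    }
}

impl Drop for Guard {
    fn drop(&mut self) {
        // A real wrapper would call the destroy command for its object here.
        self.log.borrow_mut().push(self.name);
    }
}

/// Creates two guards and returns the order in which they were released.
pub fn create_and_drop() -> Vec<&'static str> {
    let log = DestroyLog::default();
    {
        let _instance = Guard::new("instance", &log);
        let _device = Guard::new("device", &log);
        // Locals are dropped in reverse declaration order at the end of this scope.
    }
    let order = log.borrow().clone();
    order
}
```

Because locals are dropped in reverse declaration order, the device is released before the instance, which matches the order explicit destruction has to follow.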

Instance

Code: main.rs

The very first thing you will want to do is initialize the Vulkan library by creating an instance. The instance is the connection between your application and the Vulkan library and creating it involves specifying some details about your application to the driver. To get started, add the following imports:

use anyhow::{anyhow, Result};

use log::*;

use vulkanalia::loader::{LibloadingLoader, LIBRARY};

use vulkanalia::window as vk_window;

use vulkanalia::prelude::v1_0::*;

Here we first add the anyhow! macro to our imports from anyhow. This macro will be used to easily construct instances of anyhow errors. Then, we import log::* so we can use the logging macros from the log crate. Next, we import LibloadingLoader, vulkanalia's libloading integration, which we will use to load the initial Vulkan commands from the Vulkan shared library. The standard name of the Vulkan shared library on your operating system (e.g., vulkan-1.dll on Windows) is then imported as LIBRARY.

Next we import vulkanalia's window integration as vk_window which in this chapter we will use to enumerate the global Vulkan extensions required to render to a window. In a future chapter we will also use vk_window to link our Vulkan instance with our winit window.

Lastly we import the Vulkan 1.0 prelude from vulkanalia which will provide all of the other Vulkan-related imports we will need for this and future chapters.

Now, to create an instance we'll next have to fill in a struct with some information about our application. This data is technically optional, but it may provide some useful information to the driver in order to optimize our specific application (e.g., because it uses a well-known graphics engine with certain special behavior). This struct is called vk::ApplicationInfo and we'll create it in a new function called create_instance that takes our window and a Vulkan entry point (which we will create later) and returns a Vulkan instance:

unsafe fn create_instance(window: &Window, entry: &Entry) -> Result<Instance> {

let application_info = vk::ApplicationInfo::builder()

.application_name(b"Vulkan Tutorial\0")

.application_version(vk::make_version(1, 0, 0))

.engine_name(b"No Engine\0")

.engine_version(vk::make_version(1, 0, 0))

.api_version(vk::make_version(1, 0, 0));

}
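The version values above are packed into a single u32. The sketch below mirrors the bit layout of the C macro VK_MAKE_VERSION: the major version starts at bit 22, the minor version at bit 12, and the patch version occupies the low 12 bits. The names pack_version and unpack_version are hypothetical and used only for illustration.

```rust
/// Packs a version triple using the same layout as VK_MAKE_VERSION.
pub const fn pack_version(major: u32, minor: u32, patch: u32) -> u32 {
    (major << 22) | (minor << 12) | patch
}

/// Splits a packed version back into (major, minor, patch).
pub const fn unpack_version(packed: u32) -> (u32, u32, u32) {
    (packed >> 22, (packed >> 12) & 0x3ff, packed & 0xfff)
}
```

Because the major version occupies the highest bits, packed versions can be compared as plain integers.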

A lot of information in Vulkan is passed through structs instead of function parameters and we'll have to fill in one more struct to provide sufficient information for creating an instance. This next struct is not optional and tells the Vulkan driver which global extensions and validation layers we want to use. Global here means that they apply to the entire program and not a specific device, which will become clear in the next few chapters. First we'll need to use vulkanalia's window integration to enumerate the required global extensions and convert them into null-terminated C strings (*const c_char):

let extensions = vk_window::get_required_instance_extensions(window)

.iter()

.map(|e| e.as_ptr())

.collect::<Vec<_>>();
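The pointer conversion can be tried in isolation with the standard library's CStr. This is only a sketch: example_extension_names returns a hard-coded, hypothetical list, whereas the tutorial obtains the names from vulkanalia's window integration. The helpers as_pointers and read_back are hypothetical as well.

```rust
use std::ffi::CStr;
use std::os::raw::c_char;

/// Hypothetical, hard-coded list of null-terminated extension names.
pub fn example_extension_names() -> Vec<&'static CStr> {
    vec![
        CStr::from_bytes_with_nul(b"VK_KHR_surface\0").expect("valid C string"),
        CStr::from_bytes_with_nul(b"VK_KHR_xcb_surface\0").expect("valid C string"),
    ]
}

/// Converts each name into the `*const c_char` form a C API expects.
pub fn as_pointers(names: &[&CStr]) -> Vec<*const c_char> {
    names.iter().map(|name| name.as_ptr()).collect()
}

/// Reads the names back through the raw pointers.
///
/// The caller must guarantee that every pointer still refers to a live,
/// null-terminated string.
pub unsafe fn read_back(pointers: &[*const c_char]) -> Vec<String> {
    pointers
        .iter()
        .map(|&p| unsafe { CStr::from_ptr(p) }.to_string_lossy().into_owned())
        .collect()
}
```

The pointers are only valid while the strings they point to are alive, which is the same lifetime concern discussed for builders earlier.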

With our list of required global extensions in hand we can create and return a Vulkan instance using the Vulkan entry point passed into this function:

let info = vk::InstanceCreateInfo::builder()

.application_info(&application_info)

.enabled_extension_names(&extensions);

Ok(entry.create_instance(&info, None)?)

As you'll see, the general pattern that object creation function parameters in Vulkan follow is:

- Reference to struct with creation info

- Optional reference to custom allocator callbacks, always None in this tutorial

Now that we have a function to create Vulkan instances from entry points, we next need to create a Vulkan entry point. This entry point will load the Vulkan commands used to query instance support and create instances. But before we do that, let's add some fields to our App struct to store the Vulkan entry point and instance we will be creating:

struct App {

entry: Entry,

instance: Instance,

}

To populate these fields, update the App::create method to the following:

unsafe fn create(window: &Window) -> Result<Self> {

let loader = LibloadingLoader::new(LIBRARY)?;

let entry = Entry::new(loader).map_err(|b| anyhow!("{}", b))?;

let instance = create_instance(window, &entry)?;

Ok(Self { entry, instance })

}

Here we first create a Vulkan function loader which will be used to load the initial Vulkan commands from the Vulkan shared library. Next we create the Vulkan entry point using the function loader which will load all of the commands we need to manage Vulkan instances. Lastly we are now able to call our create_instance function with the Vulkan entry point.

Cleaning up

The Instance should only be destroyed right before the program exits. It can be destroyed in the App::destroy method using destroy_instance:

unsafe fn destroy(&mut self) {

self.instance.destroy_instance(None);

}

Like the Vulkan commands used to create objects, the commands used to destroy objects also take an optional reference to custom allocator callbacks. So like before, we pass None to indicate we are content with the default allocation behavior.

Non-conformant Vulkan implementations

Not every platform is fortunate enough to have an implementation of the Vulkan API that fully conforms to the Vulkan specification. On such a platform, there may be standard Vulkan features that are not available and/or there may be significant differences between the actual behavior of a Vulkan application using that non-conformant implementation and how the Vulkan specification says that application should behave.

Since version 1.3.216 of the Vulkan SDK, applications that use a non-conformant Vulkan implementation must enable some additional Vulkan extensions. These compatibility extensions have the primary purpose of forcing the developer to acknowledge that their application is using a non-conformant implementation of Vulkan and that they should not expect everything to be as the Vulkan specification says it should be.

This tutorial will be utilizing these compatibility Vulkan extensions so that your application can run even on platforms that lack a fully conforming Vulkan implementation.

However, you might ask "Why are we doing this? Do we really need to worry about supporting niche platforms in an introductory Vulkan tutorial?" As it turns out, the not-so-niche macOS is among those platforms that lack a fully-conformant Vulkan implementation.

As was mentioned in the introduction, Apple has their own low-level graphics API, Metal. The Vulkan implementation that is provided as part of the Vulkan SDK for macOS (MoltenVK) is a layer that sits in-between your application and Metal and translates the Vulkan API calls your application makes into Metal API calls. Because MoltenVK is not fully conformant with the Vulkan specification, you will need to enable the compatibility Vulkan extensions we've been talking about to support macOS.

As an aside, while MoltenVK is not fully-conformant, you shouldn't encounter any issues caused by deviations from the Vulkan specification while following this tutorial on macOS.

Enabling compatibility extensions

Note: Even if you are not following this tutorial on macOS, some of the code added in this section is referenced in the remainder of this tutorial so you can't just skip it!

We'll want to check if the version of Vulkan we are using is equal to or greater than the version of Vulkan that introduced the compatibility extension requirement. With this goal in mind, we'll first add an additional import:

use vulkanalia::Version;

With this new import in place, we'll define a constant for the minimum version:

const PORTABILITY_MACOS_VERSION: Version = Version::new(1, 3, 216);

Replace the extension enumeration and instance creation code with the following:

let mut extensions = vk_window::get_required_instance_extensions(window)

.iter()

.map(|e| e.as_ptr())

.collect::<Vec<_>>();

// Required by Vulkan SDK on macOS since 1.3.216.

let flags = if

cfg!(target_os = "macos") &&

entry.version()? >= PORTABILITY_MACOS_VERSION

{

info!("Enabling extensions for macOS portability.");

extensions.push(vk::KHR_GET_PHYSICAL_DEVICE_PROPERTIES2_EXTENSION.name.as_ptr());

extensions.push(vk::KHR_PORTABILITY_ENUMERATION_EXTENSION.name.as_ptr());

vk::InstanceCreateFlags::ENUMERATE_PORTABILITY_KHR

} else {

vk::InstanceCreateFlags::empty()

};

let info = vk::InstanceCreateInfo::builder()

.application_info(&application_info)

.enabled_extension_names(&extensions)

.flags(flags);

This code enables KHR_PORTABILITY_ENUMERATION_EXTENSION if your application is being compiled for a platform that lacks a conformant Vulkan implementation (just checking for macOS here) and the Vulkan version meets or exceeds the minimum version we just defined.

This code also enables KHR_GET_PHYSICAL_DEVICE_PROPERTIES2_EXTENSION under the same conditions. This extension is needed to enable the KHR_PORTABILITY_SUBSET_EXTENSION device extension which will be added later in the tutorial when we set up a logical device.
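The decision logic can be isolated into a small, testable function. In the sketch below SdkVersion, PORTABILITY_MIN, and portability_extensions are hypothetical stand-ins. It assumes only that versions compare field by field (major, then minor, then patch), which is what deriving Ord on a struct with fields in that order gives you. The platform check is passed in as a bool so both branches can be exercised on any machine.

```rust
/// Hypothetical version triple. The derived ordering compares the fields
/// in declaration order: major, then minor, then patch.
#[derive(Clone, Copy, Debug, PartialEq, Eq, PartialOrd, Ord)]
pub struct SdkVersion {
    pub major: u32,
    pub minor: u32,
    pub patch: u32,
}

/// First version that requires the compatibility extensions.
pub const PORTABILITY_MIN: SdkVersion = SdkVersion { major: 1, minor: 3, patch: 216 };

/// Returns the extra instance extensions to enable, if any.
/// In a real program `is_macos` would come from `cfg!(target_os = "macos")`.
pub fn portability_extensions(is_macos: bool, version: SdkVersion) -> Vec<&'static str> {
    if is_macos && version >= PORTABILITY_MIN {
        vec![
            "VK_KHR_get_physical_device_properties2",
            "VK_KHR_portability_enumeration",
        ]
    } else {
        Vec::new()
    }
}
```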

Instance vs vk::Instance

When we call our create_instance function, what we get back is not a raw Vulkan instance (vk::Instance) as would be returned by the Vulkan command vkCreateInstance. Instead, what we get back is a custom type defined by vulkanalia which combines both a raw Vulkan instance and the commands loaded for that specific instance.

This is the Instance type we have been using (imported from the vulkanalia prelude) which should not be confused with the vk::Instance type which represents a raw Vulkan instance. In future chapters we will also use the Device type which, like Instance, is a pairing of a raw Vulkan device (vk::Device) and the commands loaded for that specific device. Fortunately we will not be using vk::Instance or vk::Device directly in this tutorial so you don't need to worry about getting them mixed up.

Because an Instance contains both a Vulkan instance and the associated commands, the command wrappers implemented for an Instance are able to provide the Vulkan instance when it is required by the underlying Vulkan command.

If you look at the documentation for the vkDestroyInstance command, you will see that it takes two parameters: the instance to destroy and the optional custom allocator callbacks. However, destroy_instance only takes the optional custom allocator callbacks because it is able to provide the raw Vulkan handle as the first parameter itself as described above.
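The relationship between the two types can be pictured with a small sketch. RawInstance, InstanceCommands, and raw_destroy below are hypothetical and much simpler than anything in vulkanalia. The point is only that a wrapper holding both the handle and the loaded commands can supply the handle on the caller's behalf.

```rust
/// Hypothetical raw handle, playing the role of `vk::Instance`.
#[derive(Clone, Copy, Debug, PartialEq, Eq)]
pub struct RawInstance(pub u64);

/// Hypothetical table of commands loaded for one specific instance.
pub struct InstanceCommands {
    pub destroy_instance: fn(RawInstance, Option<&'static str>) -> String,
}

/// Pairs the raw handle with its commands, like the wrapper type described above.
pub struct Instance {
    handle: RawInstance,
    commands: InstanceCommands,
}

impl Instance {
    pub fn new(handle: RawInstance, commands: InstanceCommands) -> Self {
        Self { handle, commands }
    }

    /// The wrapper passes the raw handle itself, so the caller only
    /// provides the optional allocator.
    pub fn destroy_instance(&self, allocator: Option<&'static str>) -> String {
        (self.commands.destroy_instance)(self.handle, allocator)
    }
}

/// Stand-in for the raw two-parameter command.
pub fn raw_destroy(instance: RawInstance, allocator: Option<&'static str>) -> String {
    format!("destroyed {} with allocator {:?}", instance.0, allocator)
}
```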

Before continuing with the more complex steps after instance creation, it's time to evaluate our debugging options by checking out validation layers.

Validation layers

Code: main.rs

The Vulkan API is designed around the idea of minimal driver overhead and one of the manifestations of that goal is that there is very limited error checking in the API by default. Even mistakes as simple as setting enumerations to incorrect values are generally not explicitly handled and will simply result in crashes or undefined behavior. Because Vulkan requires you to be very explicit about everything you're doing, it's easy to make many small mistakes like using a new GPU feature and forgetting to request it at logical device creation time.

However, that doesn't mean that these checks can't be added to the API. Vulkan introduces an elegant system for this known as validation layers. Validation layers are optional components that hook into Vulkan function calls to apply additional operations. Common operations in validation layers are:

- Checking the values of parameters against the specification to detect misuse

- Tracking creation and destruction of objects to find resource leaks

- Checking thread safety by tracking the threads that calls originate from

- Logging every call and its parameters to the standard output

- Tracing Vulkan calls for profiling and replaying

Here's an example of what the implementation of a function in a diagnostics validation layer could look like (in C):

VkResult vkCreateInstance(

const VkInstanceCreateInfo* pCreateInfo,

const VkAllocationCallbacks* pAllocator,

VkInstance* instance

) {

if (pCreateInfo == nullptr || instance == nullptr) {

log("Null pointer passed to required parameter!");

return VK_ERROR_INITIALIZATION_FAILED;

}

return real_vkCreateInstance(pCreateInfo, pAllocator, instance);

}

These validation layers can be freely stacked to include all the debugging functionality that you're interested in. You can simply enable validation layers for debug builds and completely disable them for release builds, which gives you the best of both worlds!
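Stacking can be pictured as wrapping one function in another. The sketch below models that idea in plain Rust with closures. CreateFn, driver_create, validation_layer, and logging_layer are hypothetical names, and this is not how the Vulkan loader is actually implemented. It only illustrates how each layer does its own work and then forwards the call to the next one.

```rust
use std::cell::RefCell;
use std::rc::Rc;

/// Hypothetical command signature: takes a size and returns a result.
pub type CreateFn = Box<dyn Fn(u32) -> Result<u32, String>>;

/// Stand-in for the real driver function at the bottom of the stack.
pub fn driver_create() -> CreateFn {
    Box::new(|size| Ok(size * 2))
}

/// Layer that checks the parameter before forwarding the call.
pub fn validation_layer(next: CreateFn) -> CreateFn {
    Box::new(move |size| {
        if size == 0 {
            return Err("size must be non-zero".to_string());
        }
        next(size)
    })
}

/// Layer that records every call and then forwards it unchanged.
pub fn logging_layer(next: CreateFn, log: Rc<RefCell<Vec<String>>>) -> CreateFn {
    Box::new(move |size| {
        log.borrow_mut().push(format!("create({})", size));
        next(size)
    })
}
```

Layers compose by nesting, for example `logging_layer(validation_layer(driver_create()), log)`, and leaving a layer out of the chain removes its cost entirely.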

Vulkan does not come with any validation layers built-in, but the LunarG Vulkan SDK provides a nice set of layers that check for common errors. They're also completely open source, so you can check which kind of mistakes they check for and contribute. Using the validation layers is the best way to avoid your application breaking on different drivers by accidentally relying on undefined behavior.

Validation layers can only be used if they have been installed onto the system. For example, the LunarG validation layers are only available on PCs with the Vulkan SDK installed.

There were formerly two different types of validation layers in Vulkan: instance and device specific. The idea was that instance layers would only check calls related to global Vulkan objects like instances, and device specific layers would only check calls related to a specific GPU. Device specific layers have now been deprecated, which means that instance validation layers apply to all Vulkan calls. The specification document still recommends that you enable validation layers at device level as well for compatibility, which is required by some implementations. We'll simply specify the same layers as the instance at logical device level, which we'll see later on.

Before we get started, we'll need some new imports for this chapter:

use std::collections::HashSet;

use std::ffi::CStr;

use std::os::raw::c_void;

// Note: This trait was called `ExtDebugUtilsExtension` in versions of `vulkanalia` prior to `v0.31.0`.

use vulkanalia::vk::ExtDebugUtilsExtensionInstanceCommands;

HashSet will be used for storing and querying supported layers. The other imports will be used in the function we will write to log messages from the validation layer, except for vk::ExtDebugUtilsExtensionInstanceCommands, which provides the command wrappers for managing debugging functionality.

Using validation layers

In this section we'll see how to enable the standard diagnostics layers provided by the Vulkan SDK. Just like extensions, validation layers need to be enabled by specifying their name. All of the useful standard validation is bundled into a layer included in the SDK that is known as VK_LAYER_KHRONOS_validation.

Let's first add two configuration variables to the program to specify the layers to enable and whether to enable them or not. I've chosen to base that value on whether the program is being compiled in debug mode or not.

const VALIDATION_ENABLED: bool =

cfg!(debug_assertions);

const VALIDATION_LAYER: vk::ExtensionName =

vk::ExtensionName::from_bytes(b"VK_LAYER_KHRONOS_validation");

We'll add some new code to our create_instance function that collects the supported instance layers into a HashSet, checks that the validation layer is available, and creates a list of layer names containing the validation layer. This code should go right below where the vk::ApplicationInfo struct is built:

let available_layers = entry

.enumerate_instance_layer_properties()?

.iter()

.map(|l| l.layer_name)

.collect::<HashSet<_>>();

if VALIDATION_ENABLED && !available_layers.contains(&VALIDATION_LAYER) {

return Err(anyhow!("Validation layer requested but not supported."));

}

let layers = if VALIDATION_ENABLED {

vec![VALIDATION_LAYER.as_ptr()]

} else {

Vec::new()

};

Then you'll need to specify the requested layers in vk::InstanceCreateInfo by adding a call to the enabled_layer_names builder method:

let info = vk::InstanceCreateInfo::builder()

.application_info(&application_info)

.enabled_layer_names(&layers)

.enabled_extension_names(&extensions)

.flags(flags);

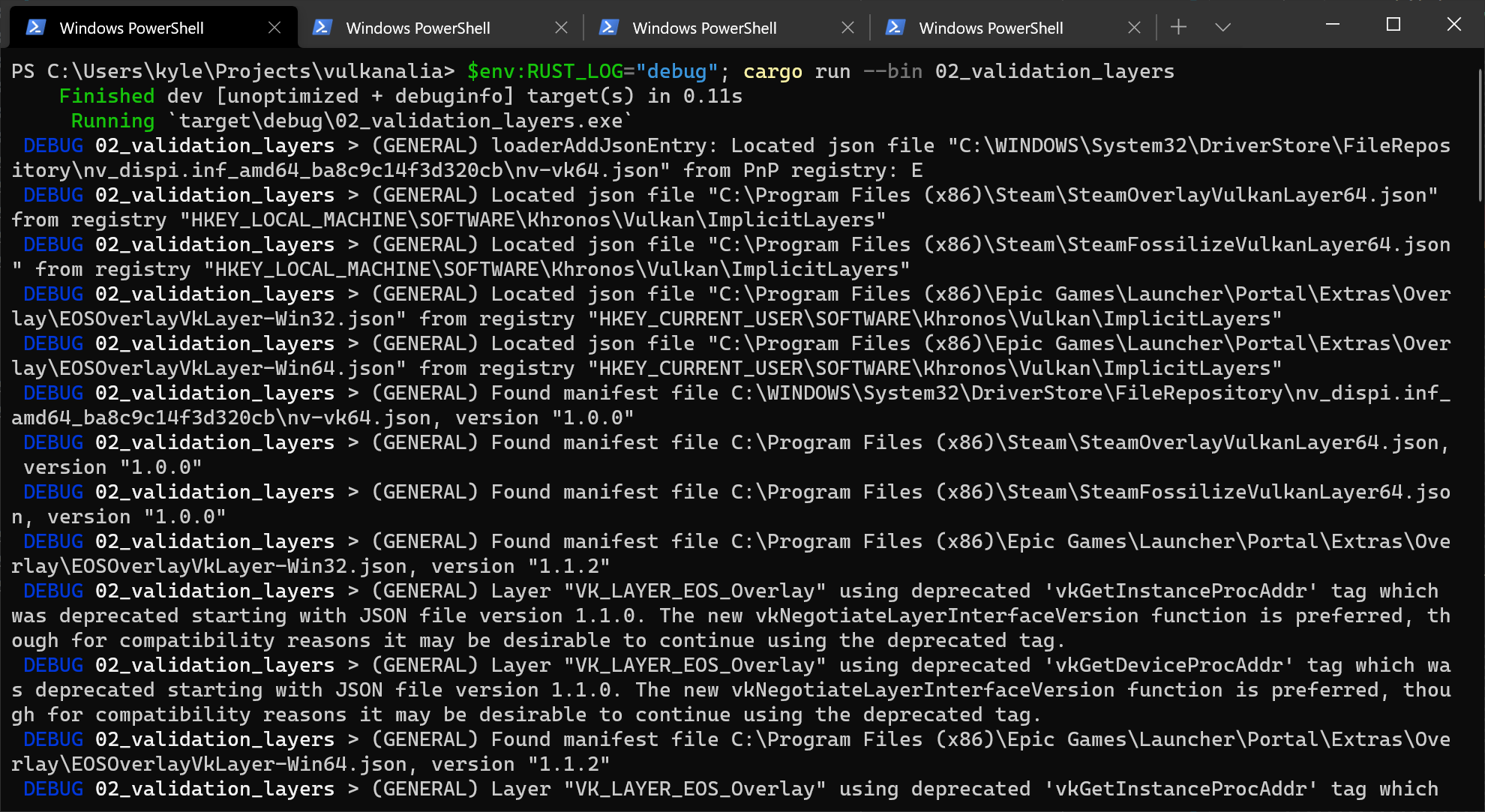

Now run the program in debug mode and ensure that the "Validation layer requested but not supported." error does not occur. If it does, then have a look at the FAQ. If you get past that check, then create_instance should never return a vk::ErrorCode::LAYER_NOT_PRESENT error code, but you should still run the program to be sure.

Message callback

The validation layers will print debug messages to the standard output by default, but we can also handle them ourselves by providing an explicit callback in our program. This will also allow you to decide which kind of messages you would like to see, because not all are necessarily (fatal) errors. If you don't want to do that right now then you may skip to the last section in this chapter.

To set up a callback in the program to handle messages and the associated details, we have to set up a debug messenger with a callback using the VK_EXT_debug_utils extension.

We'll add some more code to our create_instance function. This time we'll modify the extensions list to be mutable and then add the debug utilities extension to the list when the validation layer is enabled:

let mut extensions = vk_window::get_required_instance_extensions(window)

.iter()

.map(|e| e.as_ptr())

.collect::<Vec<_>>();

if VALIDATION_ENABLED {

extensions.push(vk::EXT_DEBUG_UTILS_EXTENSION.name.as_ptr());

}

vulkanalia provides a collection of metadata for each Vulkan extension. In this case we just need the name of the extension to load, so we add the value of the name field of the vk::EXT_DEBUG_UTILS_EXTENSION struct constant to our list of desired extension names.

Run the program to make sure you don't receive a vk::ErrorCode::EXTENSION_NOT_PRESENT error code. We don't really need to check for the existence of this extension, because it should be implied by the availability of the validation layers.

Now let's see what a debug callback function looks like. Add a new extern "system" function called debug_callback that matches the vk::PFN_vkDebugUtilsMessengerCallbackEXT prototype. The extern "system" is necessary to allow Vulkan to call our Rust function.

extern "system" fn debug_callback(

severity: vk::DebugUtilsMessageSeverityFlagsEXT,

type_: vk::DebugUtilsMessageTypeFlagsEXT,

data: *const vk::DebugUtilsMessengerCallbackDataEXT,

_: *mut c_void,

) -> vk::Bool32 {

let data = unsafe { *data };

let message = unsafe { CStr::from_ptr(data.message) }.to_string_lossy();

if severity >= vk::DebugUtilsMessageSeverityFlagsEXT::ERROR {

error!("({:?}) {}", type_, message);

} else if severity >= vk::DebugUtilsMessageSeverityFlagsEXT::WARNING {

warn!("({:?}) {}", type_, message);

} else if severity >= vk::DebugUtilsMessageSeverityFlagsEXT::INFO {

debug!("({:?}) {}", type_, message);

} else {

trace!("({:?}) {}", type_, message);

}

vk::FALSE

}

The first parameter specifies the severity of the message, which is one of the following flags:

vk::DebugUtilsMessageSeverityFlagsEXT::VERBOSE – Diagnostic message

vk::DebugUtilsMessageSeverityFlagsEXT::INFO – Informational message like the creation of a resource

vk::DebugUtilsMessageSeverityFlagsEXT::WARNING – Message about behavior that is not necessarily an error, but very likely a bug in your application

vk::DebugUtilsMessageSeverityFlagsEXT::ERROR – Message about behavior that is invalid and may cause crashes

The values of these flags are set up in such a way that you can use a comparison operation to check whether a message is equal to or worse than some level of severity. We use this here to decide which log macro is appropriate for the message.
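
To see why the comparison works, it helps to look at the raw bit values. The sketch below uses plain u32 constants holding the values the Vulkan headers assign to the severity bits, and a hypothetical log_level helper that mirrors the if/else chain in debug_callback; it is a stand-alone illustration and does not use the vulkanalia flag types:

```rust
// Bit values of the VkDebugUtilsMessageSeverityFlagBitsEXT flags.
const VERBOSE: u32 = 0x0001;
const INFO: u32 = 0x0010;
const WARNING: u32 = 0x0100;
const ERROR: u32 = 0x1000;

/// Hypothetical helper: maps a severity to the name of the log macro
/// that `debug_callback` would use for it.
fn log_level(severity: u32) -> &'static str {
    if severity >= ERROR {
        "error"
    } else if severity >= WARNING {
        "warn"
    } else if severity >= INFO {
        "debug"
    } else {
        "trace"
    }
}
```

Because each more severe flag has a numerically larger value, a single `>=` comparison is enough to express "this severity or worse".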

The type_ parameter can have the following values:

vk::DebugUtilsMessageTypeFlagsEXT::GENERAL – Some event has happened that is unrelated to the specification or performance

vk::DebugUtilsMessageTypeFlagsEXT::VALIDATION – Something has happened that violates the specification or indicates a possible mistake

vk::DebugUtilsMessageTypeFlagsEXT::PERFORMANCE – Potential non-optimal use of Vulkan

The data parameter refers to a vk::DebugUtilsMessengerCallbackDataEXT struct containing the details of the message itself, with the most important members being:

message – The debug message as a null-terminated string (*const c_char)

objects – Array of Vulkan object handles related to the message

object_count – Number of objects in the array
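
Reading the message member means converting a null-terminated C string into something Rust can print. A minimal sketch of that conversion using only the standard library follows; message_to_string is a hypothetical helper name, and the callback in this chapter performs the same CStr::from_ptr and to_string_lossy steps inline:

```rust
use std::ffi::CStr;
use std::os::raw::c_char;

/// Hypothetical helper: copies a null-terminated C string into an owned `String`.
///
/// # Safety
/// `ptr` must be non-null and point to a valid null-terminated string.
unsafe fn message_to_string(ptr: *const c_char) -> String {
    // `to_string_lossy` replaces invalid UTF-8 sequences with U+FFFD
    // instead of failing, which is convenient for log output.
    unsafe { CStr::from_ptr(ptr) }.to_string_lossy().into_owned()
}
```

The lossy conversion is a reasonable choice for logging because a malformed message is still better reported than dropped.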

Finally, the last parameter, here ignored as _, contains a pointer that was specified during the setup of the callback and allows you to pass your own data to it.

The callback returns a (Vulkan) boolean that indicates if the Vulkan call that triggered the validation layer message should be aborted. If the callback returns true, then the call is aborted with the vk::ErrorCode::VALIDATION_FAILED_EXT error code. This is normally only used to test the validation layers themselves, so you should always return vk::FALSE.

All that remains now is telling Vulkan about the callback function. Perhaps somewhat surprisingly, even the debug callback in Vulkan is managed with a handle that needs to be explicitly created and destroyed. Such a callback is part of a debug messenger and you can have as many of them as you want. Add a field to the AppData struct:

struct AppData {

messenger: vk::DebugUtilsMessengerEXT,

}

Now modify the signature and end of the create_instance function to look like this:

unsafe fn create_instance(

window: &Window,

entry: &Entry,

data: &mut AppData

) -> Result<Instance> {

// ...

let instance = entry.create_instance(&info, None)?;

if VALIDATION_ENABLED {

let debug_info = vk::DebugUtilsMessengerCreateInfoEXT::builder()

.message_severity(vk::DebugUtilsMessageSeverityFlagsEXT::all())

.message_type(

vk::DebugUtilsMessageTypeFlagsEXT::GENERAL

| vk::DebugUtilsMessageTypeFlagsEXT::VALIDATION

| vk::DebugUtilsMessageTypeFlagsEXT::PERFORMANCE,

)

.user_callback(Some(debug_callback));

data.messenger = instance.create_debug_utils_messenger_ext(&debug_info, None)?;

}

Ok(instance)

}

Note: Calling the all static method on a set of Vulkan flags (e.g., vk::DebugUtilsMessageSeverityFlagsEXT::all() as in the above code) will, as the name implies, return a set of flags containing all of the flags of that type known by vulkanalia. A complete set of flags may contain flags that are only valid when certain extensions are enabled or flags added by a newer version of Vulkan than the one you are using/targeting.

In the above code we've explicitly listed the vk::DebugUtilsMessageTypeFlagsEXT flags we want because that set of flags contains a flag (vk::DebugUtilsMessageTypeFlagsEXT::DEVICE_ADDRESS_BINDING) that is only valid when a certain extension is enabled.

In most cases using unsupported flags shouldn't cause any errors or changes in the behavior of your application, but it definitely will result in validation errors if you have the validation layers enabled (as we are aiming to do in this chapter).
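
The difference between all() and an explicit list can be illustrated with the raw bit values. The following sketch uses plain u32 constants holding the values the Vulkan headers assign to the message type bits; ALL, CORE and unsupported_bits are hypothetical names for illustration only, not vulkanalia items:

```rust
// Bit values of the VkDebugUtilsMessageTypeFlagBitsEXT flags.
const GENERAL: u32 = 0x1;
const VALIDATION: u32 = 0x2;
const PERFORMANCE: u32 = 0x4;
// Only valid when the VK_EXT_device_address_binding_report extension is enabled.
const DEVICE_ADDRESS_BINDING: u32 = 0x8;

/// Every flag in this sketch, analogous to what `all()` would return.
const ALL: u32 = GENERAL | VALIDATION | PERFORMANCE | DEVICE_ADDRESS_BINDING;

/// The explicitly listed flags used in `create_instance`.
const CORE: u32 = GENERAL | VALIDATION | PERFORMANCE;

/// Hypothetical helper: returns the bits in `requested` that are not in `supported`.
fn unsupported_bits(requested: u32, supported: u32) -> u32 {
    requested & !supported
}
```

Here unsupported_bits(ALL, CORE) leaves exactly the DEVICE_ADDRESS_BINDING bit, which is the flag the validation layers would complain about.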

We have first extracted our Vulkan instance out of the return expression so we can use it to add our debug callback.

Then we construct a vk::DebugUtilsMessengerCreateInfoEXT struct which provides information about our debug callback and how it will be called.

The message_severity field allows you to specify all the severities you would like your callback to be called for. I've requested that messages of all severities be included. This would normally produce a lot of verbose general debug info, but we can filter that out using a log level when we are not interested in it.

Similarly the message_type field lets you filter which types of messages your callback is notified about. I've simply enabled all types here. You can always disable some if they're not useful to you.

Finally, the user_callback field specifies the callback function. You can optionally pass a mutable reference to the user_data field which will be passed along to the callback function via the final parameter. You could use this to pass a pointer to the AppData struct, for example.
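
To illustrate how user data travels through an untyped pointer, here is a simplified sketch using only the standard library. MessageStats and count_message are hypothetical names, and the callback below models only the user-data parameter rather than the full vk::PFN_vkDebugUtilsMessengerCallbackEXT signature:

```rust
use std::os::raw::c_void;

/// Hypothetical application state shared with the callback.
#[derive(Default)]
struct MessageStats {
    count: u32,
}

/// Stand-in for a debug callback: only the user-data parameter is modeled.
extern "system" fn count_message(user_data: *mut c_void) -> u32 {
    if user_data.is_null() {
        return 0;
    }

    // Safety: the caller promises that a non-null `user_data` points to a
    // live `MessageStats` that is not accessed elsewhere during this call.
    let stats = unsafe { &mut *(user_data as *mut MessageStats) };
    stats.count += 1;
    stats.count
}
```

A caller would obtain the pointer with something like `&mut stats as *mut MessageStats as *mut c_void` and must keep stats alive for as long as the callback can be invoked.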

Lastly we call create_debug_utils_messenger_ext to register our debug callback with the Vulkan instance.

Since our create_instance function takes an AppData reference now, we'll also need to update App and App::create:

Note: AppData::default() will use the implementation of the Default trait generated by the presence of #[derive(Default)] on the AppData struct. This will result in containers like Vec being initialized to empty lists and Vulkan handles like vk::DebugUtilsMessengerEXT being initialized to null handles. If Vulkan handles are not initialized properly before they are used, the validation layers we are enabling in this chapter should let us know exactly what we missed.

struct App {

entry: Entry,

instance: Instance,

data: AppData,

}

impl App {

unsafe fn create(window: &Window) -> Result<Self> {

// ...

let mut data = AppData::default();

let instance = create_instance(window, &entry, &mut data)?;

Ok(Self { entry, instance, data })

}

}
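
The behavior of #[derive(Default)] described in the note can be tried out in isolation. In this sketch, Handle is a hypothetical stand-in for a Vulkan handle type (with 0 playing the role of a null handle), and this AppData with its images field is a simplified stand-in for the struct used in the tutorial:

```rust
/// Stand-in for a Vulkan handle type; a value of 0 plays the role of a null handle.
#[derive(Clone, Copy, Debug, Default, PartialEq)]
struct Handle(u64);

impl Handle {
    /// Returns true if this handle has not been assigned a real object.
    fn is_null(self) -> bool {
        self.0 == 0
    }
}

/// Simplified stand-in for the tutorial's `AppData` struct.
#[derive(Debug, Default)]
struct AppData {
    messenger: Handle,
    images: Vec<Handle>,
}
```

Calling AppData::default() on this struct yields a null messenger and an empty images list, without any hand-written initialization code.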

The vk::DebugUtilsMessengerEXT object we created needs to be cleaned up before our app exits. We'll do this in App::destroy before we destroy the instance:

unsafe fn destroy(&mut self) {

if VALIDATION_ENABLED {

self.instance.destroy_debug_utils_messenger_ext(self.data.messenger, None);

}

self.instance.destroy_instance(None);

}

Debugging instance creation and destruction

Although we've now added debugging with validation layers to the program we're not covering everything quite yet. The create_debug_utils_messenger_ext call requires a valid instance to have been created and destroy_debug_utils_messenger_ext must be called before the instance is destroyed. This currently leaves us unable to debug any issues in the create_instance and destroy_instance calls.

However, if you closely read the extension documentation, you'll see that there is a way to create a separate debug utils messenger specifically for those two function calls. It requires you to simply pass a pointer to a vk::DebugUtilsMessengerCreateInfoEXT struct in the next extension field of vk::InstanceCreateInfo. Before we do this, let's first discuss how extending structs works in Vulkan.

The s_type field that is present on many Vulkan structs was briefly mentioned in the Builders section of the Overview chapter. It was said that this field must be set to the vk::StructureType variant indicating the type of the struct (e.g., vk::StructureType::APPLICATION_INFO for a vk::ApplicationInfo struct).

You may have wondered what the purpose of this field is: doesn't Vulkan already know the type of structs passed to its commands? The purpose of this field is wrapped up with the purpose of the next field that always accompanies the s_type field in Vulkan structs: the ability to extend a Vulkan struct with other Vulkan structs.

The next field in a Vulkan struct may be used to specify a structure pointer chain. next can be either null or a pointer to a Vulkan struct that is permitted by Vulkan to extend the struct. Each struct in this chain is used to provide additional information to the Vulkan command the root structure is passed to. This feature of Vulkan allows for extending the functionality of Vulkan commands without breaking backwards compatibility.

When you pass such a chain of structs to a Vulkan command, it must iterate through the structs to collect all of the information from them. Because the next pointers are untyped, Vulkan can't know the type of each structure in the chain ahead of time, hence the need for the s_type field.
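
The following sketch shows, under simplifying assumptions, how a consumer could walk such a chain by reading only the leading s_type and next fields. All types here (StructureType, Header, InstanceCreateInfo, DebugMessengerCreateInfo) and the chain_types function are hypothetical miniature stand-ins written for this example, not the vulkanalia definitions:

```rust
use std::os::raw::c_void;

/// Hypothetical structure type tags (real code uses `vk::StructureType`).
#[repr(u32)]
#[derive(Clone, Copy, Debug, PartialEq)]
enum StructureType {
    InstanceCreateInfo = 1,
    DebugMessengerCreateInfo = 2,
}

/// The two fields every extendable struct in this sketch starts with.
#[repr(C)]
struct Header {
    s_type: StructureType,
    next: *const c_void,
}

#[repr(C)]
struct InstanceCreateInfo {
    s_type: StructureType,
    next: *const c_void,
    flags: u32,
}

#[repr(C)]
struct DebugMessengerCreateInfo {
    s_type: StructureType,
    next: *const c_void,
    severity: u32,
}

/// Walks the chain starting at `root` and returns the tag of each struct.
///
/// # Safety
/// Every pointer in the chain must be null or point to a live `#[repr(C)]`
/// struct that begins with the `Header` fields.
unsafe fn chain_types(root: *const c_void) -> Vec<StructureType> {
    let mut types = Vec::new();
    let mut current = root;
    while !current.is_null() {
        // Only the common header is read; `s_type` tells the consumer
        // which concrete struct the pointer could be cast to.
        let header = unsafe { &*(current as *const Header) };
        types.push(header.s_type);
        current = header.next;
    }
    types
}
```

For an InstanceCreateInfo whose next field points at a DebugMessengerCreateInfo, chain_types returns the two tags in chain order, which is the information a consumer needs before casting each pointer to its concrete type.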

The builders provided by vulkanalia allow for easily building these pointer chains in a type-safe manner. For example, take a look at the vk::InstanceCreateInfoBuilder builder, specifically the push_next method. This method allows adding any Vulkan struct for which the vk::ExtendsInstanceCreateInfo trait is implemented to the pointer chain for a vk::InstanceCreateInfo.
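
The idea of restricting a pointer chain with a marker trait can be sketched in a few lines. This is a simplified illustration of the general technique, not a copy of how vulkanalia implements push_next; RootInfo, DebugInfo and ExtendsRootInfo are hypothetical names, and unlike a real builder this sketch does not use lifetimes to keep the extension struct alive:

```rust
use std::os::raw::c_void;

/// Hypothetical marker trait: only types implementing it may extend `RootInfo`.
trait ExtendsRootInfo {
    /// Gives access to the extension struct's own `next` pointer.
    fn next_mut(&mut self) -> &mut *const c_void;
}

#[repr(C)]
struct RootInfo {
    next: *const c_void,
}

#[repr(C)]
struct DebugInfo {
    next: *const c_void,
    severity: u32,
}

impl ExtendsRootInfo for DebugInfo {
    fn next_mut(&mut self) -> &mut *const c_void {
        &mut self.next
    }
}

impl RootInfo {
    /// Prepends `ext` to the chain. The trait bound rejects unrelated
    /// types at compile time. Note: this sketch does not tie the lifetime
    /// of `ext` to the returned value, so the caller must keep it alive.
    fn push_next<T: ExtendsRootInfo>(mut self, ext: &mut T) -> Self {
        *ext.next_mut() = self.next;
        self.next = ext as *mut T as *const c_void;
        self
    }
}
```

With this setup, passing a struct that does not implement ExtendsRootInfo to push_next fails to compile, which is the kind of mistake the trait bound is meant to catch.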

One such struct is vk::DebugUtilsMessengerCreateInfoEXT, which we will now use to extend our vk::InstanceCreateInfo struct to set up our debug callback. To do this we'll continue to modify our create_instance function. This time we'll make the info struct mutable so we can modify its pointer chain. We'll also move the debug_info struct, now also mutable, so that it sits directly below info and can be pushed onto info's pointer chain:

let mut info = vk::InstanceCreateInfo::builder()

.application_info(&application_info)

.enabled_layer_names(&layers)

.enabled_extension_names(&extensions)

.flags(flags);

let mut debug_info = vk::DebugUtilsMessengerCreateInfoEXT::builder()

.message_severity(vk::DebugUtilsMessageSeverityFlagsEXT::all())

.message_type(

vk::DebugUtilsMessageTypeFlagsEXT::GENERAL

| vk::DebugUtilsMessageTypeFlagsEXT::VALIDATION

| vk::DebugUtilsMessageTypeFlagsEXT::PERFORMANCE,

)

.user_callback(Some(debug_callback));

if VALIDATION_ENABLED {

info = info.push_next(&mut debug_info);

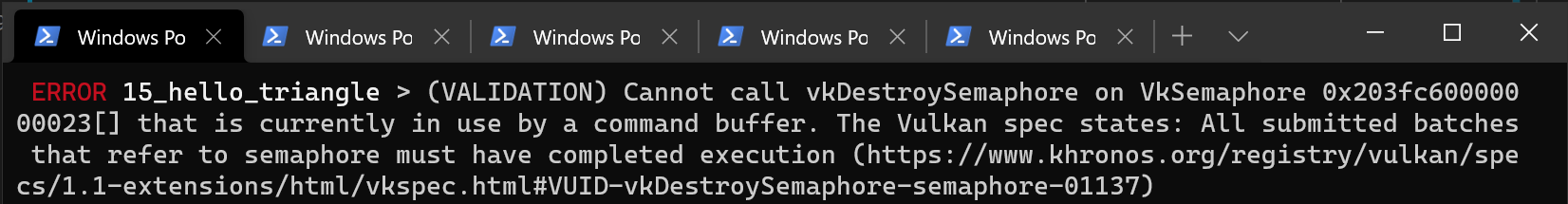

}